What is BAML?

The best way to understand BAML and its developer experience is to see it live in a demo (see below).

Demo video

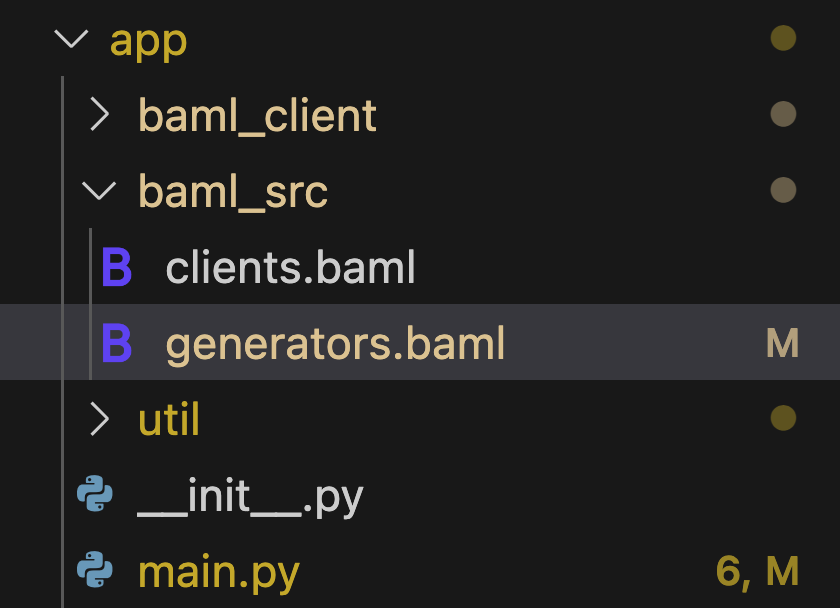

In the demo, we write a BAML function definition and then call it from a Python script.
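For readers who cannot play the video, the sketch below is a rough plain-Python analogy of that flow. It is not BAML syntax and does not use BAML's generated client. It assumes that, from the caller's side, a BAML function looks like an ordinary function that takes input and returns structured data. The names `Resume`, `fake_llm`, and `extract_resume` are hypothetical, and the model call is replaced by a canned stub so the script runs offline.

```python
import json
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Resume:
    """Hypothetical structured result type (illustration only)."""
    name: str
    skills: List[str]


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call: ignores the prompt and
    # returns a fixed JSON reply so the example runs offline.
    return '{"name": "Ada Lovelace", "skills": ["math", "programming"]}'


def extract_resume(text: str, llm: Callable[[str], str] = fake_llm) -> Resume:
    # Hypothetical "function definition": build a prompt, call the model,
    # and parse the reply into a structured value.
    prompt = "Extract the name and skills as JSON from this resume:\n" + text
    raw = llm(prompt)
    data = json.loads(raw)
    return Resume(name=data["name"], skills=list(data["skills"]))


if __name__ == "__main__":
    # The "Python script" half of the flow: call it like any other function.
    resume = extract_resume("Ada Lovelace. Skills: math, programming.")
    print(resume.name, resume.skills)
```

In the demo, the function definition lives in BAML rather than in Python; only the final call resembles what is shown here.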

Examples

High-level Developer Flow

Continue on to the Installation Guides for your language to set up BAML in a few minutes!

You don’t need to migrate 100% of your LLM code to BAML in one go! It works alongside any existing LLM framework.