Prompting in BAML

We recommend reading the installation instructions first.

BAML functions are special definitions that get converted into real code (Python, TypeScript, etc.) that calls LLMs. Think of them as a way to define AI-powered functions that are type-safe and easy to use in your application.

What BAML Functions Actually Do

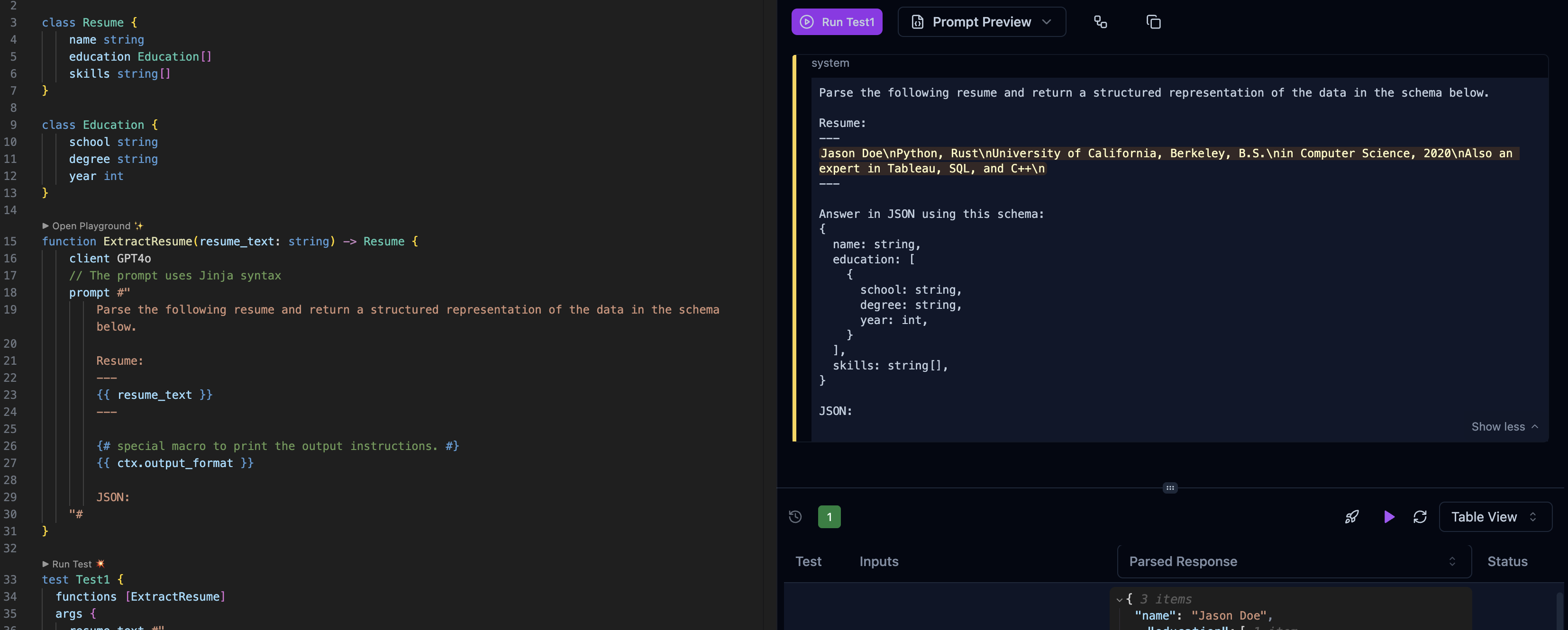

When you write a BAML function (here, one that turns resume_text into a Resume), BAML converts it into code that:

- Takes your input (resume_text)
- Sends a request to OpenAI’s GPT-4 API with your prompt
- Parses the JSON response into your Resume type
- Returns a type-safe object you can use in your code
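The four steps above can be sketched in plain Python. This is a simplified illustration, not the code BAML actually generates: the Resume fields (name, skills), the prompt wording, and the fake_llm stand-in for the HTTP request are all assumptions made so the example runs offline.

```python
import json
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Resume:
    # Hypothetical fields; the real ones come from your BAML class definition.
    name: str
    skills: List[str]


def extract_resume(resume_text: str, send_to_llm: Callable[[str], str]) -> Resume:
    # 1. Take the input and render it into the prompt.
    prompt = (
        "Extract the resume below as JSON with keys 'name' and 'skills'.\n\n"
        + resume_text
    )
    # 2. Send the prompt to the model (injected here so the sketch runs offline).
    raw = send_to_llm(prompt)
    # 3. Parse the JSON response.
    data = json.loads(raw)
    # 4. Return a typed object instead of a raw dict.
    return Resume(name=str(data["name"]), skills=[str(s) for s in data["skills"]])


def fake_llm(prompt: str) -> str:
    # Stand-in for the request to the LLM provider (an assumption for this sketch).
    return '{"name": "Ada Lovelace", "skills": ["math", "programming"]}'


resume = extract_resume("Ada Lovelace. Skills: math, programming.", fake_llm)
print(resume.name, resume.skills)
```

Passing the transport in as a parameter keeps the sketch testable; in a real project the generated client handles the request for you.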

Prompt Preview + seeing the cURL request

For maximum transparency, you can inspect the exact API request BAML makes to the LLM provider using the VSCode extension. The Prompt Preview below shows the full rendered prompt (once you add a test case):

Note how the {{ ctx.output_format }} macro is replaced with the output schema instructions.

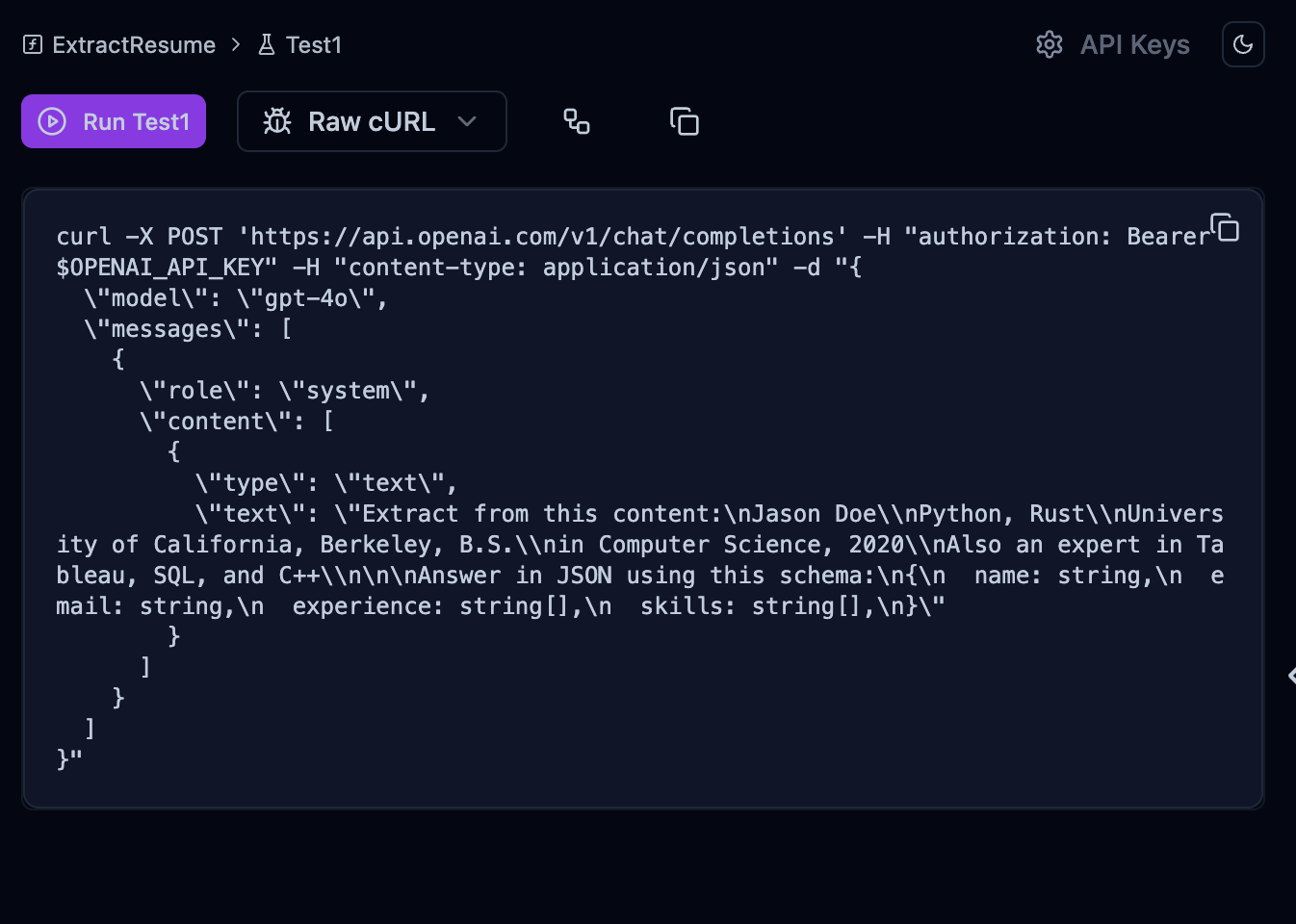

The Playground will also show you the raw cURL request (switch from “Prompt Preview” to “Raw cURL”).

Always include the {{ ctx.output_format }} macro in your prompt. It injects your output schema into the prompt, so the LLM knows what structure to return. You can also customize what it prints.
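To make the macro substitution concrete, here is a rough Python sketch of the idea: a placeholder in the prompt template is swapped for schema instructions before the request is sent. The render_prompt helper, the schema dictionary, and the exact instruction wording are assumptions for illustration; the text BAML really emits is what the Prompt Preview shows.

```python
OUTPUT_FORMAT_MACRO = "{{ ctx.output_format }}"


def render_prompt(template: str, schema: dict) -> str:
    # Build schema instructions from field names and type names.
    # The wording here is illustrative, not BAML's exact output.
    lines = ["Answer in JSON using this schema:", "{"]
    for field, type_name in schema.items():
        lines.append(f"  {field}: {type_name},")
    lines.append("}")
    # Replace the macro with the rendered instructions.
    return template.replace(OUTPUT_FORMAT_MACRO, "\n".join(lines))


template = (
    "Extract the resume.\n\n"
    "{{ ctx.output_format }}\n\n"
    "Resume:\nAda Lovelace. Skills: math, programming."
)
rendered = render_prompt(template, {"name": "string", "skills": "string[]"})
print(rendered)
```

If the macro were left out of the template, the replace call would change nothing and the model would never see the schema, which is why the macro should always be present.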

One of our design philosophies is to never hide the prompt from you. You control and can always see the entire prompt.

Calling the function

Recall that BAML generates a baml_client directory in the language of your choice, using the parameters in your generator config. It contains the functions and types you defined.

Now we can call the function, which makes a request to the LLM and returns the Resume object.
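A call from Python might look roughly like the sketch below. In a real project you would import the generated client (typically `from baml_client import b`) rather than define it yourself; here a stand-in object mimics its shape so the example runs without a generated client or an API key. The function name ExtractResume and the Resume fields name and skills are assumptions, since they depend on your own BAML definitions.

```python
from types import SimpleNamespace

# In a real project, import the generated client instead, e.g.:
#   from baml_client import b
# The stand-in below only mimics its shape so this sketch runs on its own.


def _fake_extract_resume(resume_text: str) -> SimpleNamespace:
    # Hypothetical result; a real call would send the prompt to the LLM.
    return SimpleNamespace(name="Ada Lovelace", skills=["math", "programming"])


b = SimpleNamespace(ExtractResume=_fake_extract_resume)


def describe(resume_text: str) -> str:
    # The generated function is called like any other typed function.
    resume = b.ExtractResume(resume_text)
    return f"{resume.name}: {', '.join(resume.skills)}"


summary = describe("Ada Lovelace. Skills: math, programming.")
print(summary)
```

Because the return value is a typed object, fields are accessed as attributes, and your editor can autocomplete them once the real client is generated.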

Do not modify any code inside baml_client, as it’s autogenerated.

Next steps

Check out PromptFiddle to see interactive BAML function examples, or view the example prompts.

Read the next guide to learn more about choosing different LLM providers and running tests in the VSCode extension.