Chain-of-Thought Prompting

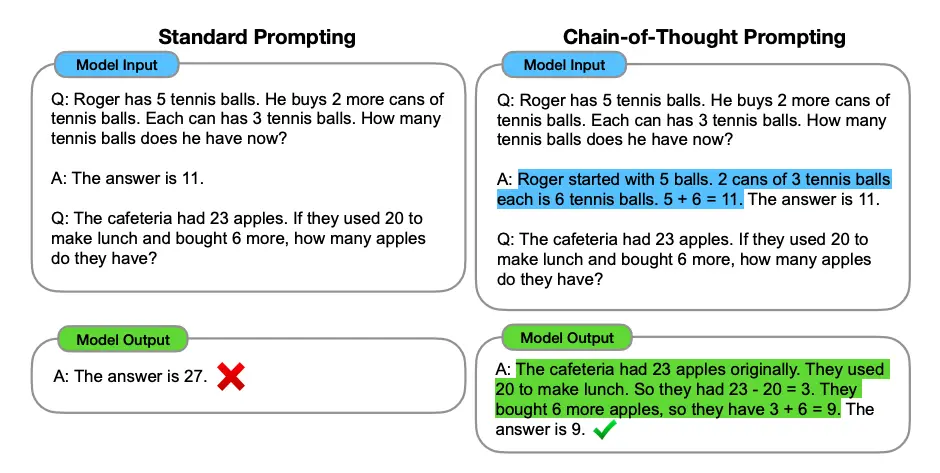

Chain-of-thought prompting is a technique that encourages the language model to think step by step, reasoning through the problem before giving an answer. This often improves the quality of the response, especially on multi-step problems. It also makes it easier to follow how the model reached its answer.

Chain-of-thought prompting, from Wei et al. (2022).

There are several ways to implement chain-of-thought prompting, especially for structured outputs:

- Technique 1: Require the model to reason before outputting the structured object.
  - Bonus: Use a `template_string` to embed the reasoning instructions into multiple functions.

- Technique 2: Require the model to reason flexibly before outputting the structured object.

- Technique 3: Embed the reasoning in the structured object.

- Technique 4: Ask the model to embed its reasoning as comments in the structured object.
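Here is a minimal Python sketch of Technique 3 outside of BAML (this is not BAML syntax). The `OrderInfo` schema and the `render_schema` and `parse_order` helpers are hypothetical. The sketch assumes the model writes JSON keys in the order the schema lists them. Under that assumption, declaring `reasoning` first makes the model reason before it fills in the answer fields.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class OrderInfo:
    # Hypothetical schema. `reasoning` is declared first so that a model that
    # emits keys in schema order writes its reasoning before the answer fields.
    reasoning: str
    order_status: str
    tracking_number: str


def render_schema(cls: type) -> str:
    """Describe the dataclass fields, in declaration order, for a prompt."""
    lines = [f'  "{f.name}": {f.type.__name__}' for f in fields(cls)]
    return "{\n" + ",\n".join(lines) + "\n}"


def parse_order(raw: str) -> OrderInfo:
    """Load the model's JSON reply; the reasoning travels with the answer."""
    data = json.loads(raw)
    return OrderInfo(**{f.name: data[f.name] for f in fields(OrderInfo)})


# Example reply a model might produce for this schema.
reply = (
    '{"reasoning": "The email says the package left the warehouse today.", '
    '"order_status": "SHIPPED", "tracking_number": "1Z999"}'
)
info = parse_order(reply)
print(render_schema(OrderInfo))
print(info.order_status)  # SHIPPED
```

Keeping the reasoning as a field stores it alongside the answer, which can help when debugging, at the cost of extra output tokens.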

Let’s look at an example of each of these.

We recommend Technique 2 for most use cases. However, each technique has its own trade-offs, so try them out and see which one works best for your application.

Because BAML uses Schema-Aligned Parsing (SAP) rather than JSON.parse or changes to how the model generates output (such as constrained generation or structured outputs), all of the above techniques work with any language model!
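Schema-Aligned Parsing does much more than this. The core reason that reasoning text does not break parsing can still be sketched in a few lines of Python: instead of running a strict parser over the entire response, search the response for the JSON object. The helper `extract_last_json_object` is a hypothetical, much simpler stand-in and is not how SAP is implemented. It keeps the last valid object, on the assumption that the answer follows the reasoning.

```python
import json
from typing import Any, Optional


def extract_last_json_object(response: str) -> Optional[dict[str, Any]]:
    """Return the last top-level JSON object embedded in `response`, or None."""
    decoder = json.JSONDecoder()
    found: Optional[dict[str, Any]] = None
    i = 0
    while True:
        start = response.find("{", i)
        if start == -1:
            return found
        try:
            # raw_decode parses one JSON value starting at `start` and
            # returns it together with the index just past its end.
            obj, end = decoder.raw_decode(response, start)
        except json.JSONDecodeError:
            i = start + 1  # not valid JSON here; try the next brace
            continue
        found = obj
        i = end  # skip past this object so its nested braces are not re-parsed


response = """If we think step by step, the email mentions a tracking number
and says the package has left the warehouse, so the order has shipped.
Therefore the output JSON is:
{"order_status": "SHIPPED", "tracking_number": "1Z999"}"""
print(extract_last_json_object(response))
```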

Technique 1: Reasoning before outputting the structured object

In the example below, we use chain-of-thought prompting to extract information from an email.
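As a language-neutral illustration of Technique 1, the following Python sketch builds a prompt that puts the schema description first and then asks the model to reason step by step before writing the JSON. The `Email` fields, the `OUTPUT_FORMAT` text, and `build_order_prompt` are hypothetical. In BAML, the schema description would be generated from the function's return type rather than written by hand.

```python
from dataclasses import dataclass


@dataclass
class Email:
    subject: str
    body: str
    from_address: str


# Hypothetical, hand-written schema description standing in for the one
# BAML renders from the function's return type.
OUTPUT_FORMAT = """Answer in JSON using this schema:
{
  "order_status": "ORDERED" | "SHIPPED" | "DELIVERED" | "CANCELLED",
  "tracking_number": string or null
}"""


def build_order_prompt(email: Email) -> str:
    """Technique 1: ask for step-by-step reasoning *before* the JSON."""
    # `{{ ... }}` in the f-string renders as a literal `{ ... }`.
    return f"""Extract the order info from this email.

INPUT:
-------
from: {email.from_address}
Email Subject: {email.subject}
Email Body: {email.body}
-------

{OUTPUT_FORMAT}

Before you output the JSON, please explain your reasoning step by step.
For example:
'If we think step by step we can see that ...
 therefore the output JSON is:
 {{ ... }}'"""


print(build_order_prompt(Email("Your order", "It shipped today.", "shop@example.com")))
```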

Reusable Chain-of-Thought Snippets

You may want to reuse the same chain-of-thought instructions in multiple functions. To do so, define them once in a `template_string` and include it in each prompt.
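The idea behind a `template_string` can be sketched in Python with an ordinary function: write the instructions once and include them in every prompt that needs them. This is an analogy, not BAML's API. `chain_of_thought`, `build_order_prompt`, and `build_invoice_prompt` are hypothetical names.

```python
def chain_of_thought(action: str = "output the JSON") -> str:
    """Hypothetical reusable snippet, analogous in spirit to a template_string."""
    return (
        f"Before you {action}, explain your reasoning step by step.\n"
        "For example:\n"
        "'If we think step by step we can see that ...\n"
        " therefore the output JSON is:\n"
        " { ... }'"
    )


# Two different prompts share the same reasoning instructions.
def build_order_prompt(email_body: str) -> str:
    return f"Extract the order status from this email:\n{email_body}\n\n{chain_of_thought()}"


def build_invoice_prompt(invoice_text: str) -> str:
    return f"Extract the invoice total from this text:\n{invoice_text}\n\n{chain_of_thought()}"


print(build_invoice_prompt("Total due: $42.00"))
```

Editing `chain_of_thought` once changes the reasoning instructions in every prompt that uses it.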

Technique 2: Allowing for flexible reasoning

This is the technique we recommend for most use cases.

The benefit of using `- ...` is that it tells the model it needs to output some information, without limiting it to a specific format or biasing it with example text that may not be relevant.

Similarly, we add `...` after two `- ...` lines to indicate that the number of items is not limited to two.
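The following Python sketch shows the shape of a Technique 2 instruction block. The exact wording and the `flexible_reasoning_instructions` and `build_prompt` helpers are assumptions for illustration. What matters is the two `- ...` placeholder lines followed by a bare `...`, with no sample content that could bias the model.

```python
def flexible_reasoning_instructions() -> str:
    """Technique 2 (sketch): ask for free-form notes before the answer.

    `- ...` signals "write some bullet points here" without dictating their
    content; the trailing `...` signals the list may be longer than two items.
    """
    return "\n".join([
        "Outline some relevant information before you answer.",
        "Example:",
        "- ...",
        "- ...",
        "...",
        "{",
        "  ... // the JSON answer",
        "}",
    ])


def build_prompt(task: str, output_format: str) -> str:
    # Hypothetical layout: task, schema description, then the reasoning cue.
    return f"{task}\n\n{output_format}\n\n{flexible_reasoning_instructions()}"


print(flexible_reasoning_instructions())
```

Because the placeholders carry no content, the model decides for itself which facts to list and how many.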