Retrieval-Augmented Generation (RAG)

RAG is a common technique for improving the quality of LLM-generated responses by grounding the model in external sources of knowledge. In this example, we’ll use BAML to manage the prompts for a RAG pipeline.

Creating BAML functions

A common way to implement RAG is to use a vector store that contains embeddings of the data. First, let’s define our BAML function for RAG.


Note how in the TestMissingContext test, the model correctly says that it doesn’t know the answer because it’s not provided in the context. The model doesn’t make up an answer; that behavior comes from the way we’ve written the prompt.
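The exact prompt is defined in the BAML function. Purely as an illustration, the hypothetical helper build_rag_prompt below renders the kind of grounded prompt such a function might send: the retrieved context is inlined, and the instructions give the model an explicit way to say it doesn't know. The wording is an assumption, not the prompt this guide uses.

```python
def build_rag_prompt(question: str, context: str) -> str:
    """Render a grounded prompt (hypothetical wording, for illustration only)."""
    # Restrict the model to the supplied context and give it an explicit
    # fallback answer when the context does not contain what was asked.
    instructions = (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, reply "
        '"I don\'t know."'
    )
    return f"{instructions}\n\nContext:\n{context}\n\nQuestion: {question}\nAnswer:"


prompt = build_rag_prompt(
    question="Who founded SpaceX?",
    context="SpaceX was founded by Elon Musk in 2002.",
)
print(prompt)
```

Without the fallback instruction, a model asked about something missing from the context has no sanctioned way to decline and is more likely to guess.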

You can generate the BAML client code for this prompt by running baml-cli generate.

Creating a VectorStore

Next, let’s create our own minimal vector store and retriever using scikit-learn.

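The guide builds its store with scikit-learn. To keep this sketch dependency-free, it swaps in a hand-rolled TF-IDF vectorizer and cosine similarity using only the standard library; the class and method names (VectorStore, retrieve) are hypothetical. With scikit-learn you would typically reach for TfidfVectorizer and cosine_similarity instead.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and keep alphanumeric runs as tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


class VectorStore:
    """Minimal in-memory TF-IDF store (stand-in for scikit-learn)."""

    def __init__(self, documents: list[str]) -> None:
        self.documents = documents
        tokenized = [tokenize(doc) for doc in documents]
        n_docs = len(documents)
        # Document frequency: in how many documents each term appears.
        df = Counter(term for tokens in tokenized for term in set(tokens))
        # Smoothed IDF, the same formula scikit-learn uses with smooth_idf=True.
        self.idf = {t: math.log((1 + n_docs) / (1 + d)) + 1 for t, d in df.items()}
        self.vectors = [self._embed(tokens) for tokens in tokenized]

    def _embed(self, tokens: list[str]) -> dict[str, float]:
        counts = Counter(tokens)
        # Terms never seen at index time have no IDF and are dropped.
        vec = {t: c * self.idf[t] for t, c in counts.items() if t in self.idf}
        norm = math.sqrt(sum(v * v for v in vec.values()))
        return {t: v / norm for t, v in vec.items()} if norm else {}

    def retrieve(self, query: str, k: int = 1) -> list[str]:
        q = self._embed(tokenize(query))
        # Vectors are L2-normalized, so the dot product is the cosine similarity.
        scores = [sum(w * vec.get(t, 0.0) for t, w in q.items()) for vec in self.vectors]
        ranked = sorted(range(len(self.documents)), key=lambda i: scores[i], reverse=True)
        return [self.documents[i] for i in ranked[:k]]


store = VectorStore([
    "SpaceX was founded by Elon Musk in 2002.",
    "The Eiffel Tower is located in Paris, France.",
    "Python is a popular programming language.",
])
print(store.retrieve("Who founded SpaceX?"))
```

TF-IDF is a sparse, keyword-based representation rather than a learned embedding, which is enough for a toy corpus; a larger dataset would usually call for dense embeddings from an embedding model.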

We can then build our RAG application in Python by calling the BAML client.
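A sketch of the application loop: for each question, retrieve context, then pass the question and context to the generation step. In the real script that step would be the generated client function (for example b.RAG from baml_client, assuming the BAML function takes question and context arguments); here it is injected as a plain callable so the sketch runs without an LLM. The names answer_questions, toy_retrieve, and toy_generate are hypothetical.

```python
from typing import Callable


def answer_questions(
    questions: list[str],
    retrieve: Callable[[str], str],
    generate: Callable[[str, str], str],
) -> dict[str, str]:
    """Run retrieve-then-generate for each question."""
    answers = {}
    for question in questions:
        # 1. Look up the most relevant text for this question.
        context = retrieve(question)
        # 2. Ground the generation step on that text only. In the real app
        #    this could be b.RAG(question, context) (assumed signature).
        answers[question] = generate(question, context)
    return answers


# Toy stand-ins so the sketch runs offline.
docs = {"spacex": "SpaceX was founded by Elon Musk in 2002."}


def toy_retrieve(question: str) -> str:
    # Keyword lookup; a real app would query the vector store instead.
    return " ".join(text for key, text in docs.items() if key in question.lower())


def toy_generate(question: str, context: str) -> str:
    # Stub "LLM": echo the context, or admit there is nothing to go on.
    return context if context else "I don't know."


results = answer_questions(
    ["Who founded SpaceX?", "What is the capital of Peru?"],
    toy_retrieve,
    toy_generate,
)
for q, a in results.items():
    print(f"{q} -> {a}")
```

Injecting the two steps keeps the loop independent of both the vector store and the LLM client, which also makes it easy to test.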

When you run the Python script, you should see output like the following:

Once again, in the last question, the model correctly says that it doesn’t know the answer because it’s not provided in the context.

That’s it! You can now try this RAG workflow with a vector database on a larger dataset. All you have to do is swap in your own retriever and pass the text it returns to the BAML function as context.

Creating Citations with LLM

In this advanced section, we’ll explore how to enhance our RAG implementation to include citations for the generated responses. This is particularly useful when you need to track the source of information in the generated responses.

First, let’s extend our BAML model to support citations. We’ll create a new response type and function that explicitly handles citations:

Let’s add a test to verify our citation functionality:

This test will demonstrate how the model:

- Provides relevant information about SpaceX and its founder

- Includes the exact source quotes in the citations array

- Only uses information that’s actually present in the context
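Because the test expects citations to be exact quotes, they can also be checked in code after the call returns. The helper below is a hypothetical post-processing step, not part of BAML: it flags any citation that does not appear verbatim in the context, after collapsing whitespace so line wrapping does not cause false misses.

```python
import re


def normalize(text: str) -> str:
    # Collapse runs of whitespace (including newlines) into single spaces.
    return re.sub(r"\s+", " ", text).strip()


def unsupported_citations(citations: list[str], context: str) -> list[str]:
    """Return the citations that are NOT verbatim substrings of the context."""
    haystack = normalize(context)
    return [c for c in citations if normalize(c) not in haystack]


context = "SpaceX was founded by Elon Musk in 2002.\nThe company develops the Falcon 9 rocket."
citations = [
    "SpaceX was founded by Elon Musk in 2002.",  # exact quote: passes
    "Musk started SpaceX in 2002.",              # paraphrase: flagged
]
print(unsupported_citations(citations, context))
```

A paraphrase can be perfectly accurate and still fail this check; that strictness is the point when citations are meant to be verifiable quotes.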

To use this enhanced RAG implementation in our Python code, we simply need to update our loop to use the new RAGWithCitations function:
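Inside the loop, the changes are which function is called and how the result is printed. As a sketch, assuming the response object exposes question, answer, and citations fields (field names are assumptions, since the BAML class is not reproduced here), a small formatter might look like this; the dataclass is a hypothetical stand-in for the generated type.

```python
from dataclasses import dataclass, field


@dataclass
class ResponseWithCitations:
    # Hypothetical stand-in for the generated BAML response type.
    question: str
    answer: str
    citations: list[str] = field(default_factory=list)


def format_response(response: ResponseWithCitations) -> str:
    """Render the answer followed by a numbered list of its sources."""
    lines = [f"Q: {response.question}", f"A: {response.answer}"]
    if response.citations:
        lines.append("Sources:")
        lines.extend(f"  [{i}] {quote}" for i, quote in enumerate(response.citations, start=1))
    return "\n".join(lines)


# In the real loop: response = b.RAGWithCitations(question, context)  (assumed signature)
response = ResponseWithCitations(
    question="Who founded SpaceX?",
    answer="SpaceX was founded by Elon Musk.",
    citations=["SpaceX was founded by Elon Musk in 2002."],
)
print(format_response(response))
```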

When you run this modified code, you’ll see responses that include both answers and their supporting citations. For example:

Notice how each piece of information in the answer is backed by a specific citation from the source context. This makes the responses more transparent and verifiable, which is especially important in applications where the source of information matters.

Using Pinecone as Vector Database

Instead of using our custom vector store, we can use Pinecone, a production-ready vector database. Here’s how to implement the same RAG pipeline using Pinecone:

First, install the required packages:

Now, let’s modify our Python code to use Pinecone:
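One step common to any Pinecone setup is turning documents into records for upsert. The sketch below assumes the record shape the Pinecone Python client accepts in index.upsert(vectors=...), a dict with id, values, and metadata, and keeps the raw text in metadata so it can be passed to the BAML function after a query. toy_embed, to_records, and batched are hypothetical helpers; a real pipeline would use an embedding model whose output dimension matches the index.

```python
import hashlib
from typing import Callable, Iterator


def toy_embed(text: str, dim: int = 8) -> list[float]:
    # Hypothetical deterministic "embedding" for illustration only:
    # hash the text and scale the first bytes into [0, 1]. Not semantic.
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return [b / 255 for b in digest[:dim]]


def to_records(docs: list[str], embed: Callable[[str], list[float]]) -> list[dict]:
    """Build upsert-ready records; the raw text goes into metadata."""
    return [
        {"id": f"doc-{i}", "values": embed(doc), "metadata": {"text": doc}}
        for i, doc in enumerate(docs)
    ]


def batched(records: list[dict], size: int) -> Iterator[list[dict]]:
    # Upsert in fixed-size chunks rather than one very large request.
    for start in range(0, len(records), size):
        yield records[start:start + size]


docs = [
    "SpaceX was founded by Elon Musk in 2002.",
    "The Eiffel Tower is located in Paris, France.",
    "Python is a popular programming language.",
]
records = to_records(docs, toy_embed)
for batch in batched(records, size=2):
    # With a real client and index this would be: index.upsert(vectors=batch)
    print([r["id"] for r in batch])
```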

The key differences when using Pinecone are:

- Documents are stored in Pinecone’s serverless infrastructure on AWS instead of in memory

- We can persist our vector database across sessions

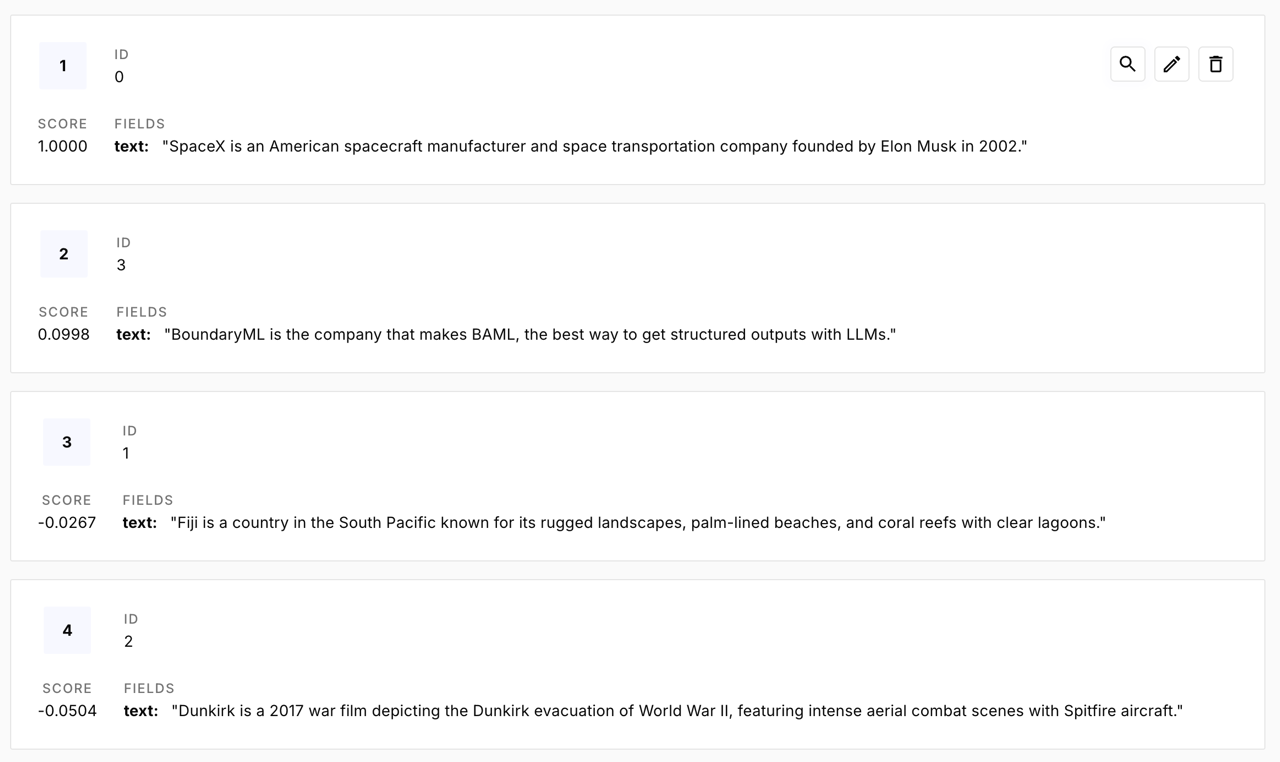

Here is a snapshot of the entriies in our Pinecone database console:

Note that you’ll need to:

- Create a Pinecone account

- Get your API key from the Pinecone console

- Replace YOUR_API_KEY with your actual Pinecone credentials

- Make sure you have access to the serverless offering in your Pinecone account
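Rather than pasting the key into the script, a common pattern is to read it from an environment variable. The sketch below assumes the variable is named PINECONE_API_KEY (a convention, not something this guide specifies) and fails fast with a clear message when it is missing; get_pinecone_api_key is a hypothetical helper.

```python
import os


def get_pinecone_api_key(var_name: str = "PINECONE_API_KEY") -> str:
    """Read the API key from the environment instead of hardcoding it."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        # Fail at startup with an actionable message, not on the first request.
        raise RuntimeError(f"Set the {var_name} environment variable to your Pinecone API key.")
    return key


# Usage (requires the pinecone package and a real key):
#   from pinecone import Pinecone
#   pc = Pinecone(api_key=get_pinecone_api_key())
```

Keeping the key out of source also keeps it out of version control.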

The BAML functions (RAG and RAGWithCitations) remain exactly the same, demonstrating how BAML cleanly separates the prompt engineering from the implementation details of your vector database.
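That separation can be made explicit on the Python side too. In the sketch below, the question-answering step depends only on a small Retriever protocol, so an in-memory store and a Pinecone-backed one are interchangeable. The protocol, KeywordRetriever, and answer are hypothetical names, and the Pinecone variant is only described in a comment.

```python
import re
from typing import Callable, Protocol


def words(text: str) -> set[str]:
    # Lowercased word set used for a crude overlap score.
    return set(re.findall(r"\w+", text.lower()))


class Retriever(Protocol):
    """Anything that can return the k most relevant texts for a query."""

    def retrieve(self, query: str, k: int) -> list[str]: ...


class KeywordRetriever:
    """Toy in-memory retriever: ranks documents by word overlap with the query."""

    def __init__(self, documents: list[str]) -> None:
        self.documents = documents

    def retrieve(self, query: str, k: int) -> list[str]:
        q = words(query)
        ranked = sorted(self.documents, key=lambda d: len(q & words(d)), reverse=True)
        return ranked[:k]


# A Pinecone-backed class would implement the same retrieve() method by
# embedding the query, querying the index, and returning the stored texts.


def answer(question: str, retriever: Retriever, generate: Callable[[str, str], str], k: int = 2) -> str:
    # The generation step (e.g. a BAML function) only ever sees plain text,
    # so swapping the retriever never touches the prompt.
    context = "\n".join(retriever.retrieve(question, k))
    return generate(question, context)


docs = ["SpaceX was founded by Elon Musk in 2002.", "Paris is the capital of France."]
result = answer("who founded spacex?", KeywordRetriever(docs), lambda question, context: context, k=1)
print(result)
```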

When you run this code, you’ll get the same type of responses as before, but now you’re using a production-ready serverless vector database that can scale automatically based on your usage.