Tools / Function Calling

“Function calling” is a technique for getting an LLM to choose a function to call for you.

The way it works is:

- Define a task with one or more functions

- Ask the LLM to choose which function to call

- Get the function parameters from the LLM for the function it chose

- Call the functions in your code with those parameters
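The steps above can be sketched in plain Python. This is a minimal illustration, not BAML's implementation: `fake_llm` is a hypothetical stand-in for a real model call, and `get_weather` and `add` are made-up example functions.

```python
import json
from typing import Callable

# Step 1: the functions the model may choose from (hypothetical examples).
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

def add(a: float, b: float) -> float:
    return a + b

TOOLS: dict[str, Callable[..., object]] = {"get_weather": get_weather, "add": add}

# Steps 2 and 3: a stand-in for the LLM. It "chooses" a function and
# returns the function name plus its parameters as a JSON string.
def fake_llm(user_message: str) -> str:
    if "weather" in user_message.lower():
        return json.dumps({"name": "get_weather", "args": {"city": "Seattle"}})
    return json.dumps({"name": "add", "args": {"a": 5, "b": 2}})

# Step 4: call the chosen function in our own code with those parameters.
def run(user_message: str) -> object:
    choice = json.loads(fake_llm(user_message))
    return TOOLS[choice["name"]](**choice["args"])
```

Note that the model never executes anything itself: it only returns a name and arguments, and the application decides whether and how to call the function.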

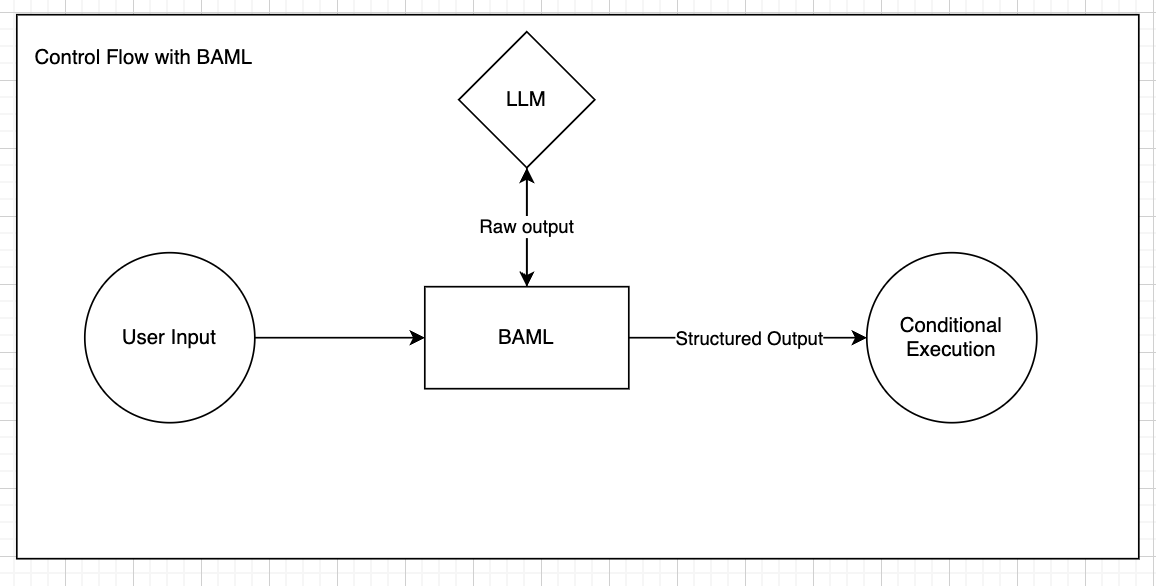

It’s common for people to think of “function calling” or “tool use” separately from “structured outputs” (even OpenAI has separate parameters for them), but at BAML, we think it’s simpler and more impactful to think of them equivalently. This is because, at the end of the day, you are looking to get something processable back from your LLM. Whether it’s extracting data from a document or calling the Weather API, you need a standard representation of that output, which is where BAML lives.

In BAML, you can represent a tool or a function you want to call as a BAML class, and make the function output be that class definition.

Call the function like this:
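A minimal Python sketch of the calling side, under stated assumptions: `use_tool` is a stub standing in for the client function generated from a BAML definition, `WeatherAPI` mirrors a hypothetical BAML class, and `get_weather` is a made-up application function.

```python
from dataclasses import dataclass

@dataclass
class WeatherAPI:
    """Mirrors a hypothetical BAML class describing one tool's arguments."""
    city: str
    time_of_day: str

def use_tool(user_message: str) -> WeatherAPI:
    # Stub: a real call would send the message to the LLM and parse its reply
    # into the class. Here the result is hard-coded for illustration.
    return WeatherAPI(city="Seattle", time_of_day="2024-01-01T12:00:00Z")

def get_weather(city: str, time_of_day: str) -> str:
    # Hypothetical application function; replace with a real weather lookup.
    return f"Forecast for {city} at {time_of_day}: sunny"

# The returned object carries the parameters; our code makes the actual call.
tool = use_tool("What's the weather like in Seattle at noon?")
result = get_weather(tool.city, tool.time_of_day)
```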

Choosing multiple Tools

To choose ONE tool out of many, you can use a union:

Call the function like this:
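As a rough Python sketch, assuming the function returns one of two tool classes: the caller checks which class came back and dispatches on it. `use_tool` is a stub for the generated client function, and both classes are hypothetical.

```python
from dataclasses import dataclass
from typing import Union

@dataclass
class WeatherAPI:
    city: str

@dataclass
class CalculatorAPI:
    a: float
    b: float

# The return type is a union: exactly one of the tools is chosen per call.
Tool = Union[WeatherAPI, CalculatorAPI]

def use_tool(user_message: str) -> Tool:
    # Stub standing in for the LLM's choice.
    if "weather" in user_message.lower():
        return WeatherAPI(city="Seattle")
    return CalculatorAPI(a=5, b=2)

def handle(tool: Tool) -> str:
    # Dispatch on the concrete class that was returned.
    if isinstance(tool, WeatherAPI):
        return f"weather lookup for {tool.city}"
    if isinstance(tool, CalculatorAPI):
        return f"sum is {tool.a + tool.b}"
    raise TypeError(f"unexpected tool: {tool!r}")
```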

Choosing N Tools

To choose many tools, you can use a list of a union:

Call the function like this:
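A minimal Python sketch, assuming the function returns a list in which each element is one of the tool classes. `use_tools` is a stub for the generated client function; the classes are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Union

@dataclass
class WeatherAPI:
    city: str

@dataclass
class CalculatorAPI:
    a: float
    b: float

Tool = Union[WeatherAPI, CalculatorAPI]

def use_tools(user_message: str) -> List[Tool]:
    # Stub: a real implementation would return whatever the LLM selected.
    return [WeatherAPI(city="Seattle"), CalculatorAPI(a=5, b=2)]

def run_all(tools: List[Tool]) -> List[str]:
    # Handle each selected tool in the order the model returned it.
    results = []
    for tool in tools:
        if isinstance(tool, WeatherAPI):
            results.append(f"weather lookup for {tool.city}")
        else:
            results.append(f"sum is {tool.a + tool.b}")
    return results
```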

Disambiguating Between Similar Tools

When building functions that can call multiple tools (represented as BAML classes), you might encounter situations where different tools accept arguments with the same name. For instance, consider GetWeather and GetTimezone classes, both taking a city: string argument. How does the system determine whether a user query like “What’s the time in London?” corresponds to GetTimezone or potentially GetWeather?

You can use string literals to solve this problem:
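To illustrate the idea in Python (a sketch, not BAML syntax): each class carries a field whose only allowed value is a fixed string. Without it, both tools would serialize to `{"city": "London"}` and be indistinguishable. The field name `tool_name` and the `parse_tool` helper are assumptions made for this example.

```python
import json
from dataclasses import dataclass
from typing import Literal, Union

@dataclass
class GetWeather:
    city: str
    # The literal acts as a tag that identifies this tool in the output.
    tool_name: Literal["get_weather"] = "get_weather"

@dataclass
class GetTimezone:
    city: str
    tool_name: Literal["get_timezone"] = "get_timezone"

def parse_tool(raw: str) -> Union[GetWeather, GetTimezone]:
    """Pick the class from the literal tag rather than from the argument names."""
    data = json.loads(raw)
    by_name = {"get_weather": GetWeather, "get_timezone": GetTimezone}
    cls = by_name[data["tool_name"]]
    return cls(city=data["city"])
```

Because the model has to emit the tag explicitly, a query like “What’s the time in London?” produces `"tool_name": "get_timezone"` and the choice is unambiguous.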

Dynamically Generate the tool signature

Defining schemas in both BAML and code can be cumbersome, so you can also define them from code. Read more about dynamic types here.

Call the function like this:
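The general idea of deriving a tool signature from code can be sketched with Python's standard `inspect` module. This is not BAML's dynamic types API: `schema_from_function` and the dictionary layout it returns are assumptions made for illustration, and `get_weather` is a hypothetical function.

```python
import inspect
from typing import Callable, get_type_hints

def get_weather(city: str, units: str = "metric") -> str:
    # Hypothetical application function whose signature we want to expose.
    return f"Weather in {city} ({units})"

def schema_from_function(fn: Callable) -> dict:
    """Build a simple schema description from a function's signature."""
    hints = get_type_hints(fn)
    params = {}
    for name, param in inspect.signature(fn).parameters.items():
        params[name] = {
            # Fall back to "any" when a parameter has no annotation.
            "type": getattr(hints.get(name), "__name__", "any"),
            # A parameter without a default must be supplied by the model.
            "required": param.default is inspect.Parameter.empty,
        }
    return {"name": fn.__name__, "parameters": params}

schema = schema_from_function(get_weather)
```

Generating the schema this way keeps a single source of truth: changing the function signature changes the tool definition with it.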

Function-calling APIs vs Prompting

Injecting your function schemas into the prompt, as BAML does, outperforms function-calling across all benchmarks for major providers (see our Berkeley FC Benchmark results with BAML).

Amongst other limitations, function-calling APIs will at times:

- Return a schema when you don’t want any (you want an error).

- Not work for tools with more than 100 parameters.

- Use many more tokens than prompting.

Keep in mind that “JSON mode” is nearly the same thing as “prompting”, but it enforces that the LLM response is ONLY a JSON blob. BAML does not use JSON mode, because avoiding it lets developers use better prompting techniques such as chain-of-thought, where the LLM expresses its reasoning before printing out the actual schema. BAML’s parser can find the JSON schema(s) in free-form text for you. Read more about different approaches to structured generation here.
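To make the idea of pulling JSON out of free-form text concrete, here is a deliberately simplified Python sketch. It is not BAML's parser, which is more lenient than this; it only finds strictly valid JSON objects, using the standard library's `json.JSONDecoder.raw_decode`. The function name `extract_json_objects` is an assumption for this example.

```python
import json
from typing import Any, List

def extract_json_objects(text: str) -> List[Any]:
    """Return every valid JSON object embedded in free-form text, in order."""
    decoder = json.JSONDecoder()
    found: List[Any] = []
    i = 0
    while True:
        start = text.find("{", i)
        if start == -1:
            break
        try:
            # raw_decode parses one JSON value starting at `start` and
            # returns it with the index just past its end.
            obj, end = decoder.raw_decode(text, start)
        except json.JSONDecodeError:
            # Not valid JSON at this brace; keep scanning after it.
            i = start + 1
            continue
        found.append(obj)
        i = end
    return found

reply = (
    "The user asks about the weather, so the weather tool fits.\n"
    '{"city": "Seattle"}\n'
    "That is my final answer."
)
tools = extract_json_objects(reply)
```

The reasoning text before and after the object is simply ignored, which is what allows chain-of-thought in the same response.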

BAML will still support native function-calling APIs in the future. Please let us know more about your use case so we can prioritize accordingly.

Create an Agent that utilizes these Tools

We can create an Agent or an “agentic loop” that continuously uses tools in a program simply by adding a while loop in our code. In this example, we’ll have two tools:

- An API that queries the weather.

- An API that does basic calculations on numbers.

This is what it looks like in the BAML file:

In our agent code, we’ll:

- Implement our APIs

- Implement our Agent that will continuously use different tools
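A minimal Python sketch of such an agent, under stated assumptions: `select_tool` is a stub standing in for the BAML-generated function that asks the LLM to pick a tool, the weather result is canned, and the loop reads from a scripted list of messages so that it terminates (an interactive version might read from `input()` instead).

```python
import operator
from dataclasses import dataclass
from typing import Iterable, List, Union

@dataclass
class WeatherAPI:
    city: str

@dataclass
class CalculatorAPI:
    operation: str  # "add", "subtract", "multiply", or "divide"
    a: float
    b: float

Tool = Union[WeatherAPI, CalculatorAPI]

# Implement our APIs (hypothetical stand-ins for real services).
def get_weather(city: str) -> str:
    return f"The weather in {city} is sunny."

def calculate(operation: str, a: float, b: float) -> float:
    ops = {"add": operator.add, "subtract": operator.sub,
           "multiply": operator.mul, "divide": operator.truediv}
    return ops[operation](a, b)

def select_tool(user_message: str) -> Tool:
    # Stub for the LLM call that chooses a tool and fills in its arguments.
    if "weather" in user_message.lower():
        return WeatherAPI(city="Seattle")
    return CalculatorAPI(operation="add", a=5, b=2)

# Implement our Agent: a while loop that keeps selecting and running tools.
def run_agent(messages: Iterable[str]) -> List[str]:
    replies: List[str] = []
    pending = iter(messages)
    while True:
        message = next(pending, None)
        if message is None or message.strip().lower() == "exit":
            break
        tool = select_tool(message)
        if isinstance(tool, WeatherAPI):
            replies.append(get_weather(tool.city))
        else:
            replies.append(str(calculate(tool.operation, tool.a, tool.b)))
    return replies
```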

We can test this by asking things like:

- What is the weather in Seattle?

- What’s 5+2?

This is the output: