Comparing OpenAI SDK

The OpenAI SDK now supports structured outputs natively, which makes it much easier to get typed responses from GPT models.

Let’s explore how this works in practice and where you might hit limitations.

Why working with LLMs requires more than just the OpenAI SDK

OpenAI’s structured outputs look fantastic at first:
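As a minimal sketch of such a request, assuming the raw `json_schema` form of `response_format` rather than the SDK’s Pydantic helper: the `Resume` dataclass, the `extract_resume` helper, and the `gpt-4o` model name are illustrative assumptions, and `client` is expected to be an `openai.OpenAI()` instance passed in by the caller.

```python
import json
from dataclasses import dataclass


@dataclass
class Resume:
    name: str
    skills: list[str]


# Hand-written JSON Schema for Resume. Strict mode expects every property
# to appear in "required" and additionalProperties to be false.
RESUME_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "skills": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["name", "skills"],
    "additionalProperties": False,
}


def extract_resume(client, text: str, model: str = "gpt-4o") -> Resume:
    """Ask the model for JSON matching RESUME_SCHEMA and parse the reply."""
    completion = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": text}],
        response_format={
            "type": "json_schema",
            "json_schema": {"name": "resume", "strict": True, "schema": RESUME_SCHEMA},
        },
    )
    data = json.loads(completion.choices[0].message.content)
    return Resume(name=data["name"], skills=data["skills"])
```

Passing the client in as an argument keeps the helper easy to exercise with a stand-in object, without a network call.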

Simple and type-safe! Let’s add education to make it more realistic:

Still works! But let’s dig deeper…

The prompt mystery

Your extraction works 90% of the time, but fails on certain resumes. You need to debug:
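A first debugging step is often to look at exactly what your own code hands to the SDK. The sketch below assumes a hypothetical `dump_request` helper and placeholder request values; it shows only the arguments you pass in, not how the API turns the schema into model input.

```python
import json


def dump_request(**request_kwargs) -> str:
    """Render chat-completion keyword arguments as pretty-printed JSON."""
    return json.dumps(request_kwargs, indent=2, sort_keys=True, default=str)


# Placeholder request; the model name and schema are assumptions.
request = {
    "model": "gpt-4o",
    "messages": [{"role": "user", "content": "Jane Doe, Go and Kubernetes"}],
    "response_format": {
        "type": "json_schema",
        "json_schema": {"name": "resume", "schema": {"type": "object"}},
    },
}

print(dump_request(**request))
```

This tells you what you sent, but not what the model actually saw, which is the part you need when an extraction fails.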

You start experimenting with system messages:
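One way this experimentation tends to look, as a sketch: the prompt texts, the `SYSTEM_PROMPTS` table, and the `build_messages` helper are all hypothetical.

```python
# Hypothetical system-prompt variants to try against the failing resumes.
SYSTEM_PROMPTS = {
    "baseline": "Extract the candidate's resume details.",
    "strict": (
        "Extract the candidate's resume details. Use only facts stated in "
        "the text and leave a list empty when nothing is mentioned."
    ),
}


def build_messages(variant: str, resume_text: str) -> list[dict]:
    """Pair one system-prompt variant with the resume as the user message."""
    return [
        {"role": "system", "content": SYSTEM_PROMPTS[variant]},
        {"role": "user", "content": resume_text},
    ]
```

Each variant changes only the system message, so any difference in output comes down to wording you have to track yourself.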

Classification without context

Now you need to classify resumes by seniority:
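A sketch of the classification type, assuming four hypothetical levels and a hand-written JSON Schema; `SeniorityLevel`, `seniority_schema`, and `parse_seniority` are illustrative names.

```python
from enum import Enum


class SeniorityLevel(str, Enum):
    JUNIOR = "junior"
    MID = "mid"
    SENIOR = "senior"
    STAFF = "staff"


def seniority_schema() -> dict:
    """JSON Schema that restricts the answer to the enum's values."""
    return {
        "type": "object",
        "properties": {
            "seniority": {
                "type": "string",
                "enum": [level.value for level in SeniorityLevel],
            }
        },
        "required": ["seniority"],
        "additionalProperties": False,
    }


def parse_seniority(payload: dict) -> SeniorityLevel:
    """Convert the model's JSON answer back into the enum."""
    return SeniorityLevel(payload["seniority"])
```

The schema carries only the bare labels; nothing in it says what separates `senior` from `staff`.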

But the model doesn’t know what these levels mean! You try adding a docstring:

But docstrings aren’t sent to the model. So you resort to prompt engineering:
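A sketch of that workaround, with the level definitions written into the prompt. The definitions are made-up business rules and `build_classification_prompt` is a hypothetical helper.

```python
# Made-up definitions for illustration; real ones come from your own rules.
LEVEL_DEFINITIONS = {
    "junior": "0-2 years of experience, works on well-scoped tasks",
    "mid": "2-5 years of experience, owns features end to end",
    "senior": "5+ years of experience, leads projects and mentors others",
    "staff": "sets technical direction across several teams",
}


def build_classification_prompt(definitions: dict[str, str]) -> str:
    """Spell out what each level means so the model is not left guessing."""
    lines = ["Classify the resume into exactly one seniority level:"]
    lines += [f"- {level}: {meaning}" for level, meaning in definitions.items()]
    return "\n".join(lines)


print(build_classification_prompt(LEVEL_DEFINITIONS))
```

The level names now live in two places, the type and this prompt, and nothing forces them to stay in sync.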

Now your business logic is split between types and prompts…

The vendor lock-in problem

Your team wants to experiment with Claude for better reasoning:
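A sketch of what the Claude version might look like, assuming the Anthropic Python SDK’s Messages API and a `client` (an `anthropic.Anthropic()` instance) passed in by the caller. The helper name and system prompt are hypothetical, and the model name is left to the caller. The sketch asks for JSON in the prompt and parses it by hand, so both the call and the parsing differ from the OpenAI code.

```python
import json


def extract_resume_with_claude(
    client, text: str, model: str, max_tokens: int = 1024
) -> dict:
    """Call the Messages API and parse the JSON reply manually."""
    response = client.messages.create(
        model=model,
        max_tokens=max_tokens,
        system=(
            "Reply with only a JSON object of the form "
            '{"name": string, "skills": [string]}.'
        ),
        messages=[{"role": "user", "content": text}],
    )
    raw = response.content[0].text
    # json.loads raises ValueError if the model wraps the JSON in prose.
    return json.loads(raw)
```

None of the `response_format` plumbing carries over, so switching providers means rewriting and retesting the extraction path.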

Testing and token tracking

You want to test your extraction and track costs:
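A sketch of the scaffolding this usually requires: a stand-in completion object for tests and a small token counter. `UsageTracker` and `fake_completion` are hypothetical helpers, and the sketch assumes the response exposes `usage.prompt_tokens` and `usage.completion_tokens`.

```python
from dataclasses import dataclass
from types import SimpleNamespace


@dataclass
class UsageTracker:
    prompt_tokens: int = 0
    completion_tokens: int = 0

    def record(self, completion) -> None:
        """Add one response's token counts to the running totals."""
        self.prompt_tokens += completion.usage.prompt_tokens
        self.completion_tokens += completion.usage.completion_tokens

    @property
    def total_tokens(self) -> int:
        return self.prompt_tokens + self.completion_tokens


def fake_completion(content: str, prompt_tokens: int, completion_tokens: int):
    """Build an object shaped like the parts of a chat completion used here."""
    return SimpleNamespace(
        choices=[SimpleNamespace(message=SimpleNamespace(content=content))],
        usage=SimpleNamespace(
            prompt_tokens=prompt_tokens, completion_tokens=completion_tokens
        ),
    )


tracker = UsageTracker()
tracker.record(fake_completion('{"name": "Ada"}', 120, 15))
tracker.record(fake_completion('{"name": "Lin"}', 98, 12))
print(tracker.total_tokens)  # 245
```

The mock only proves your parsing code works; it says nothing about how the real model responds to the real prompt.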

Production complexity creep

As your app scales, you need:

- Retry logic for rate limits

- Fallback to GPT-3.5 when GPT-4 is down

- A/B testing different prompts

- Structured logging for debugging

Your code evolves:
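A sketch of where that tends to end up, assuming a `client` passed in by the caller, the two model names from the list above, and a hypothetical `complete_with_fallback` helper. It catches a broad `Exception` for brevity; real code would catch narrower errors such as the SDK’s rate-limit exception.

```python
import logging
import time

logger = logging.getLogger("resume_extraction")


def complete_with_fallback(
    client,
    messages,
    models=("gpt-4", "gpt-3.5-turbo"),
    max_retries=3,
    base_delay=1.0,
    sleep=time.sleep,
):
    """Try each model in order, retrying each with exponential backoff."""
    last_error = None
    for model in models:
        for attempt in range(max_retries):
            try:
                completion = client.chat.completions.create(
                    model=model, messages=messages
                )
                logger.info("model=%s attempt=%d succeeded", model, attempt + 1)
                return completion
            except Exception as exc:  # broad on purpose; narrow this in real code
                last_error = exc
                logger.warning(
                    "model=%s attempt=%d failed: %s", model, attempt + 1, exc
                )
                # Back off before the next retry, but not before switching models.
                if attempt < max_retries - 1:
                    sleep(base_delay * 2**attempt)
    raise RuntimeError("all models failed") from last_error
```

The `sleep` parameter exists only so tests can skip the real delays. A/B testing of prompts would add yet another layer on top of this.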

The simple API is now buried in error handling and logging.

Enter BAML

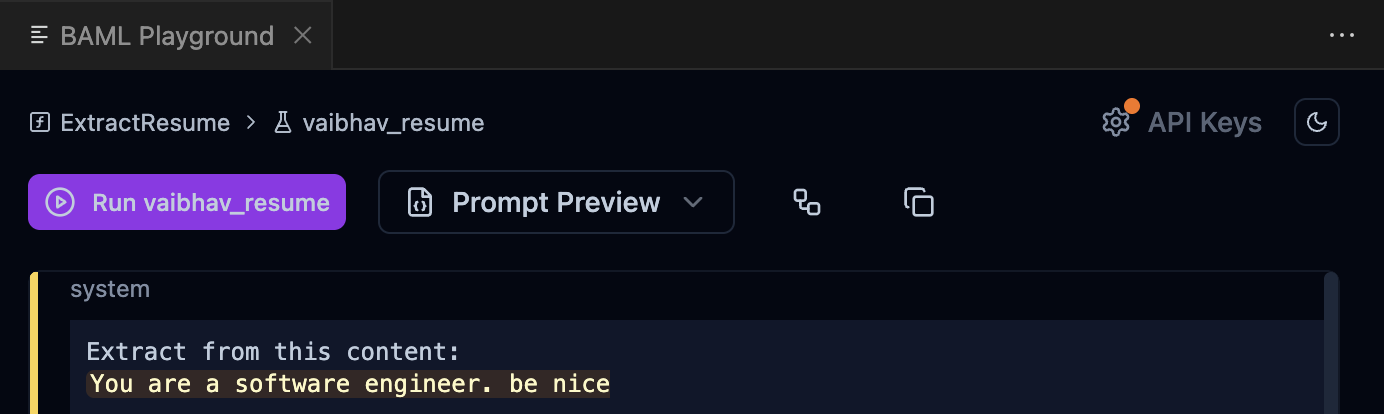

BAML was built for real-world LLM applications. Here’s the same resume extraction:

See the difference?

- The prompt is explicit - No guessing what’s sent

- Enums have descriptions - Built into the type system

- One place for everything - Types and prompts together

Multi-model freedom

In Python:

Testing without burning money

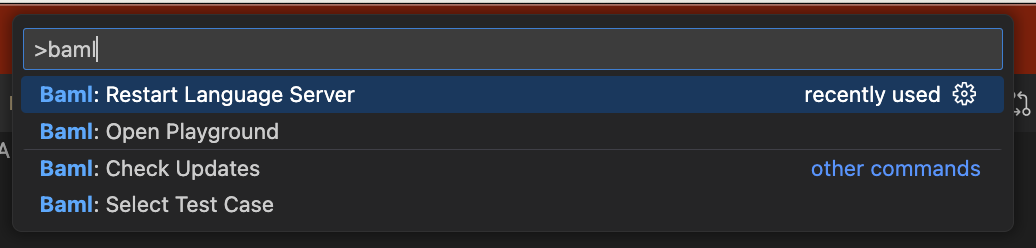

With BAML’s VSCode extension:

- Write your test cases - Visual interface for test data

- See the exact prompt - No hidden abstractions

- Test instantly without API calls

- Iterate until perfect - Instant feedback loop

- Save test cases for CI/CD

No mocking, no token costs, real testing.

Built for production

All the production concerns handled declaratively.

The bottom line

OpenAI’s structured outputs are great if you:

- Only use OpenAI models

- Don’t need prompt customization

- Have simple extraction needs

But production LLM applications need more.

BAML’s advantages over the OpenAI SDK:

- Model flexibility - Works with GPT, Claude, Gemini, Llama, and any future model

- Prompt transparency - See and optimize exactly what’s sent to the LLM

- Real testing - Test in VSCode without burning tokens or API calls

- Production features - Built-in retries, fallbacks, and smart routing

- Cost optimization - Understand token usage and optimize prompts

- Schema-Aligned Parsing - Get structured outputs from any model, not just OpenAI

- Streaming + Structure - Stream structured data with loading bars

Why this matters:

- Future-proof - Never get locked into one model provider

- Faster development - Instant testing and iteration in your editor

- Better reliability - Built-in error handling and fallback strategies

- Team productivity - Prompts are versioned, testable code

- Cost control - Optimize token usage across different models

With BAML, you get all the benefits of OpenAI’s structured outputs plus the flexibility and control needed for production applications.

Limitations of BAML

BAML has some limitations:

- It’s a new language (though easy to learn)

- Best experience needs VSCode

- Focused on structured extraction

If you’re building a simple OpenAI-only prototype, the OpenAI SDK is fine. If you’re building production LLM features that need to scale, try BAML.