Why BAML?

Let’s say you want to extract structured data from resumes. It starts simple enough…

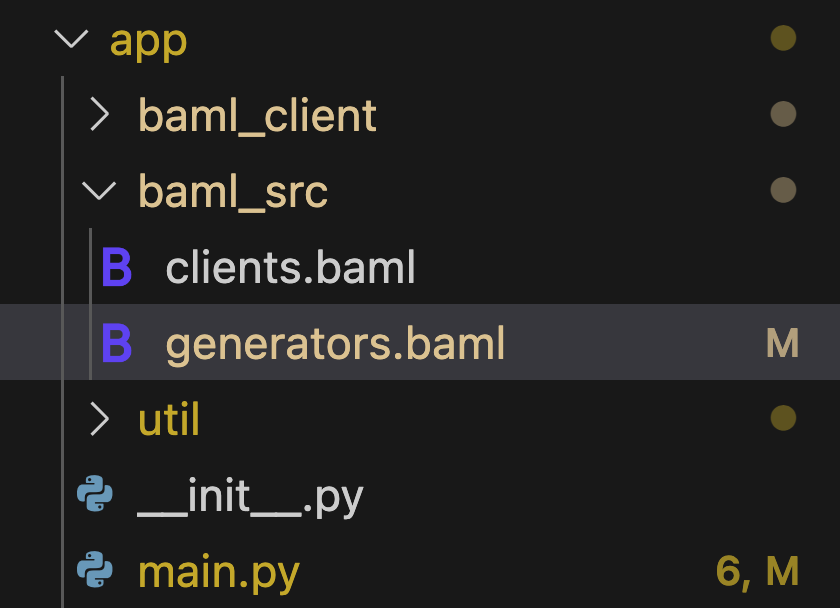

But first, let’s see where we’re going with this story:

BAML: What it is and how it helps - see the full developer experience

It starts simple

You begin with a basic LLM call to extract a name and skills.
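Here is a minimal sketch of that first attempt. `call_llm` is a hypothetical stand-in for a provider SDK call. It returns canned text so the example runs offline.

```python
def call_llm(prompt: str) -> str:
    """Hypothetical stand-in for a provider SDK call; returns canned free text."""
    return "The candidate is Jane Doe. She knows Python, SQL and Kubernetes."


def extract_resume(resume_text: str) -> str:
    # Ask for the fields in plain English and hope the reply is usable.
    prompt = f"Extract the name and skills from this resume:\n\n{resume_text}"
    return call_llm(prompt)


answer = extract_resume("Jane Doe\nSkills: Python, SQL, Kubernetes")
print(answer)  # a sentence, not a record: the caller still has to parse it
```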

This works… sometimes. But you need structured data, not free text.

You need structure

So you try JSON mode and add Pydantic for validation.
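The sketch below shows this stage. Pydantic is not needed to demonstrate the pattern, so a standard-library dataclass with hand-written checks stands in for the Pydantic model. `call_llm_json` is a hypothetical JSON-mode call that returns canned output.

```python
import json
from dataclasses import dataclass


@dataclass
class Resume:
    # Stand-in for a Pydantic model: a typed record plus manual validation.
    name: str
    skills: list[str]

    @classmethod
    def from_json(cls, raw: str) -> "Resume":
        data = json.loads(raw)  # raises json.JSONDecodeError on malformed output
        if not isinstance(data, dict):
            raise ValueError("expected a JSON object")
        if not isinstance(data.get("name"), str):
            raise ValueError("name must be a string")
        skills = data.get("skills")
        if not isinstance(skills, list) or not all(isinstance(s, str) for s in skills):
            raise ValueError("skills must be a list of strings")
        return cls(name=data["name"], skills=skills)


def call_llm_json(prompt: str) -> str:
    """Hypothetical JSON-mode call; returns a canned JSON string."""
    return '{"name": "Jane Doe", "skills": ["Python", "SQL"]}'


def extract_resume(resume_text: str) -> Resume:
    prompt = (
        "Extract the candidate's name and skills. "
        'Respond only with JSON of the form {"name": string, "skills": [string]}.\n\n'
        + resume_text
    )
    return Resume.from_json(call_llm_json(prompt))


resume = extract_resume("Jane Doe\nSkills: Python, SQL")
```

The output shape is now described twice: once in the class and once in the prompt string.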

Better! But now you need more fields. You add education, experience, and location.
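The next sketch shows roughly how the growing schema and prompt look, with standard-library dataclasses standing in for Pydantic. The field names and the prompt wording are illustrative assumptions, not taken from the original example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Education:
    school: str
    degree: str
    year: Optional[int] = None


@dataclass
class Experience:
    company: str
    title: str
    start: str                 # e.g. "2021-03"; the format is only enforced by the prompt
    end: Optional[str] = None  # None means "current role"


@dataclass
class Resume:
    name: str
    skills: list[str] = field(default_factory=list)
    education: list[Education] = field(default_factory=list)
    experience: list[Experience] = field(default_factory=list)
    location: Optional[str] = None


# Every new field must be described again, by hand, in the prompt string.
PROMPT_TEMPLATE = """Extract the following from the resume and respond only with JSON:
- name: the candidate's full name
- skills: list of skills
- education: list of {{school, degree, year}} (year may be null)
- experience: list of {{company, title, start, end}} (end is null for the current job)
- location: city and country, or null if not stated

Resume:
{resume}
"""


def build_prompt(resume_text: str) -> str:
    return PROMPT_TEMPLATE.format(resume=resume_text)
```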

The prompt gets longer and more complex. But wait - how do you test this without burning tokens?

Testing becomes expensive

Every test costs money and takes time.
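Here is a back-of-the-envelope sketch that puts a number on it. Every input (suite size, runs per day, tokens per call, price per million tokens) is a placeholder, not real pricing. Substitute your own values.

```python
def suite_cost(num_cases: int, runs_per_day: int, tokens_per_call: int,
               usd_per_million_tokens: float) -> float:
    """Daily cost of a test suite that calls a real model once per case."""
    tokens_per_day = num_cases * runs_per_day * tokens_per_call
    return tokens_per_day / 1_000_000 * usd_per_million_tokens


# Placeholder numbers for illustration only.
daily = suite_cost(num_cases=50, runs_per_day=20, tokens_per_call=2_000,
                   usd_per_million_tokens=5.0)
print(f"${daily:.2f} per day")
```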

You try mocking, but then you’re not testing your actual extraction logic. Your prompt could be completely broken and tests would still pass.
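The following sketch shows why the mock proves nothing. `fake_llm` is a hypothetical mock that ignores the prompt, so the assertion passes even though the prompt is deliberately garbled.

```python
import json
from typing import Callable


def extract_name(resume_text: str, llm: Callable[[str], str]) -> str:
    # Deliberately broken prompt: garbled instruction, no output format.
    prompt = "Extrct teh nmae: " + resume_text
    return json.loads(llm(prompt))["name"]


def fake_llm(prompt: str) -> str:
    # The mock never looks at the prompt; it always returns a canned answer.
    return '{"name": "Jane Doe"}'


# Passes despite the broken prompt: the test never exercises it.
assert extract_name("Jane Doe, Python developer", llm=fake_llm) == "Jane Doe"
```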

Error handling nightmare

Real resumes break your extraction. The LLM returns malformed JSON.
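A few illustrative shapes of "almost JSON" appear below. They are made-up examples, not captured model output, and a strict `json.loads` rejects every one of them.

```python
import json

FENCE = "`" * 3  # built up so this example can live inside a Markdown code block

# Made-up examples of "almost JSON" that strict parsing rejects.
samples = [
    FENCE + 'json\n{"name": "Jane Doe"}\n' + FENCE,  # wrapped in a Markdown fence
    '{"name": "Jane Doe", "skills": ["Python",]}',   # trailing comma
    'Sure! Here is the JSON: {"name": "Jane Doe"}',  # chatty preamble
    "{'name': 'Jane Doe'}",                          # single-quoted keys and strings
]

failures = 0
for raw in samples:
    try:
        json.loads(raw)
    except json.JSONDecodeError as err:
        failures += 1
        print(f"{err.msg}: {raw[:30]!r}")

print(f"{failures}/{len(samples)} samples failed to parse")
```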

You add retry logic, JSON fixing, and error handling.
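Here is a sketch of that hand-rolled layer. The repair rules, backoff schedule, and function names are assumptions for illustration, and the trailing-comma fix is deliberately naive.

```python
import json
import re
import time
from typing import Callable, Optional


def repair_json(raw: str) -> str:
    """Naive cleanup for common formatting slips (illustrative only)."""
    # Keep the outermost {...} span, dropping code fences and chatty preambles.
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found")
    # Drop trailing commas before ] or }. Naive: also rewrites such commas inside strings.
    return re.sub(r",\s*([\]}])", r"\1", raw[start:end + 1])


def extract_with_retries(prompt: str, llm: Callable[[str], str],
                         max_attempts: int = 3, backoff_s: float = 0.5) -> dict:
    last_error: Optional[Exception] = None
    for attempt in range(max_attempts):
        try:
            return json.loads(repair_json(llm(prompt)))
        except ValueError as err:  # json.JSONDecodeError is a ValueError subclass
            last_error = err
            if attempt + 1 < max_attempts:
                time.sleep(backoff_s * 2 ** attempt)  # exponential backoff
    raise RuntimeError(f"extraction failed after {max_attempts} attempts") from last_error
```

This is already a couple dozen lines, and it still handles nothing beyond the cases it was written for.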

Your simple extraction function is now 50+ lines of infrastructure code.

Multi-model chaos

Your company wants to use Claude for some tasks (better reasoning) and GPT-4o-mini for others (cost savings).
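The routing code tends to look something like this sketch. Both provider wrappers are hypothetical placeholders (no real SDK is called), and `"claude-model"` is a placeholder model name.

```python
# Hypothetical per-provider wrappers. Real SDKs differ in request shape,
# response shape, and feature support (JSON mode, tool calling, etc.).
def call_anthropic(model: str, prompt: str) -> str:
    return f"[{model}] response"  # placeholder for an Anthropic SDK call


def call_openai(model: str, prompt: str) -> str:
    return f"[{model}] response"  # placeholder for an OpenAI SDK call


def run_task(task: str, prompt: str) -> str:
    # Routing, model names, and per-provider quirks all end up hand-coded here.
    if task == "reasoning":
        raw = call_anthropic("claude-model", prompt)
        # ...Anthropic-specific response unpacking would go here
    elif task == "bulk_extraction":
        raw = call_openai("gpt-4o-mini", prompt)
        # ...OpenAI-specific JSON-mode handling would go here
    else:
        raise ValueError(f"no model configured for task {task!r}")
    return raw
```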

Each provider has different APIs, different response formats, different capabilities. Your code becomes a mess of if/else statements.

The prompt mystery

Your extraction fails on certain resumes. You need to debug, but what was actually sent to the LLM?

You start adding logging, token counting, prompt inspection tools…
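A typical first step is a logging wrapper like the sketch below. The token count uses the rough "about four characters per token" heuristic for English text, which is an approximation and not a real tokenizer.

```python
import logging
from typing import Callable

logger = logging.getLogger("llm")


def estimate_tokens(text: str) -> int:
    # Rough heuristic (~4 characters per token for English text); use the
    # provider's tokenizer when you need exact numbers.
    return max(1, len(text) // 4)


def logged_call(prompt: str, llm: Callable[[str], str]) -> str:
    # Record exactly what went out and what came back.
    logger.debug("prompt (~%d tokens):\n%s", estimate_tokens(prompt), prompt)
    response = llm(prompt)
    logger.debug("response (~%d tokens):\n%s", estimate_tokens(response), response)
    return response
```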

Classification gets complex

Now you need to classify seniority levels.

But the LLM doesn’t know what these levels mean! You update the prompt.
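Here is a sketch of where this ends up. The level names and their definitions are hypothetical. The key point is that the enum, the definitions, and the prompt text live in three separate places.

```python
from enum import Enum


class Seniority(str, Enum):
    # Hypothetical levels for illustration.
    JUNIOR = "junior"
    MID = "mid"
    SENIOR = "senior"
    STAFF = "staff"


# Business definitions live in a Python dict, away from the prompt that uses them.
LEVEL_DEFINITIONS = {
    Seniority.JUNIOR: "0-2 years of experience; works on well-scoped tasks",
    Seniority.MID: "2-5 years; owns features end to end",
    Seniority.SENIOR: "5+ years; leads projects and mentors others",
    Seniority.STAFF: "sets technical direction across several teams",
}


def build_classification_prompt(resume_text: str) -> str:
    levels = "\n".join(f"- {lvl.value}: {desc}" for lvl, desc in LEVEL_DEFINITIONS.items())
    return (
        "Classify the candidate's seniority as exactly one of these levels:\n"
        f"{levels}\n\n"
        "Answer with the level name only.\n\n"
        f"Resume:\n{resume_text}"
    )


def parse_seniority(raw: str) -> Seniority:
    # Replies like " Senior\n" must be normalized; anything else raises ValueError.
    return Seniority(raw.strip().lower())
```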

Your prompt is getting huge and your business logic is scattered between code and strings.

Production deployment headaches

In production, you need:

- Retry policies for rate limits

- Fallback models when primary is down

- Cost tracking and optimization

- Error monitoring and alerting

- A/B testing different prompts

Your simple extraction function becomes a complex service.
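The sketch below covers the list above in about 40 lines, and it is still a toy. `RateLimitError`, the call-count "cost tracking," and the random A/B split are all illustrative assumptions.

```python
import logging
import random
import time
from dataclasses import dataclass, field
from typing import Callable

LLM = Callable[[str], str]
log = logging.getLogger("extraction")


class RateLimitError(Exception):
    """Hypothetical error a provider wrapper might raise on HTTP 429."""


@dataclass
class ExtractionService:
    primary: LLM
    fallback: LLM
    prompt_variants: dict[str, str]  # A/B testing: variant name -> prompt template
    max_retries: int = 3
    backoff_s: float = 1.0
    calls_per_variant: dict[str, int] = field(default_factory=dict)  # crude usage tracking

    def _call_with_retries(self, llm: LLM, prompt: str) -> str:
        # Retry only on rate limits; other errors propagate immediately.
        for attempt in range(self.max_retries):
            try:
                return llm(prompt)
            except RateLimitError:
                if attempt + 1 < self.max_retries:
                    time.sleep(self.backoff_s * 2 ** attempt)
        raise RateLimitError("retries exhausted")

    def extract(self, resume_text: str) -> str:
        variant = random.choice(sorted(self.prompt_variants))  # naive random A/B split
        prompt = self.prompt_variants[variant].format(resume=resume_text)
        self.calls_per_variant[variant] = self.calls_per_variant.get(variant, 0) + 1
        try:
            return self._call_with_retries(self.primary, prompt)
        except Exception:
            # Error monitoring and alerting would hook in here.
            log.exception("primary model failed; using fallback")
            return self._call_with_retries(self.fallback, prompt)
```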

Enter BAML

What if you could go back to something simple, but keep all the power?

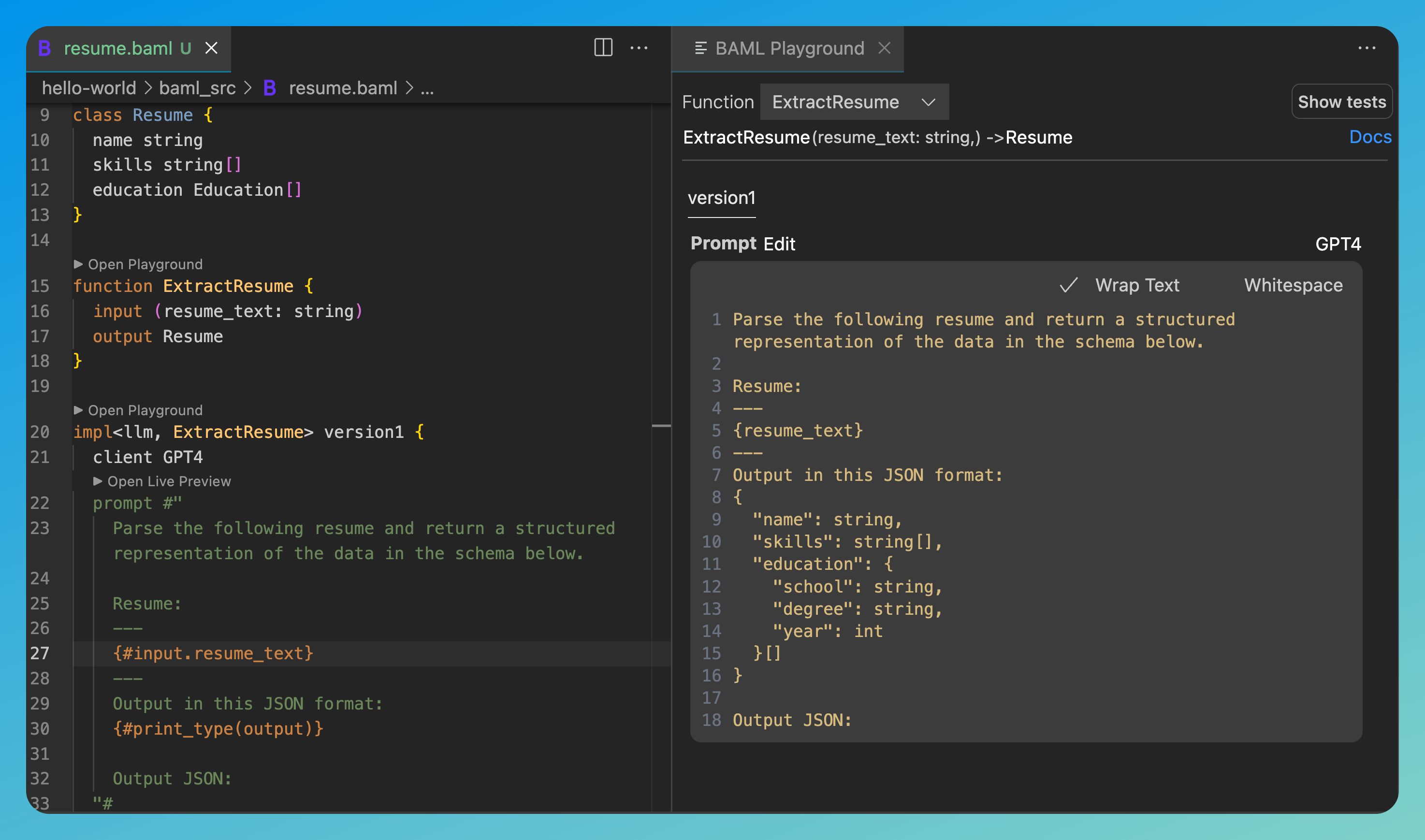

Look what you get immediately:

BAML playground showing successful resume extraction with clear prompts and structured output

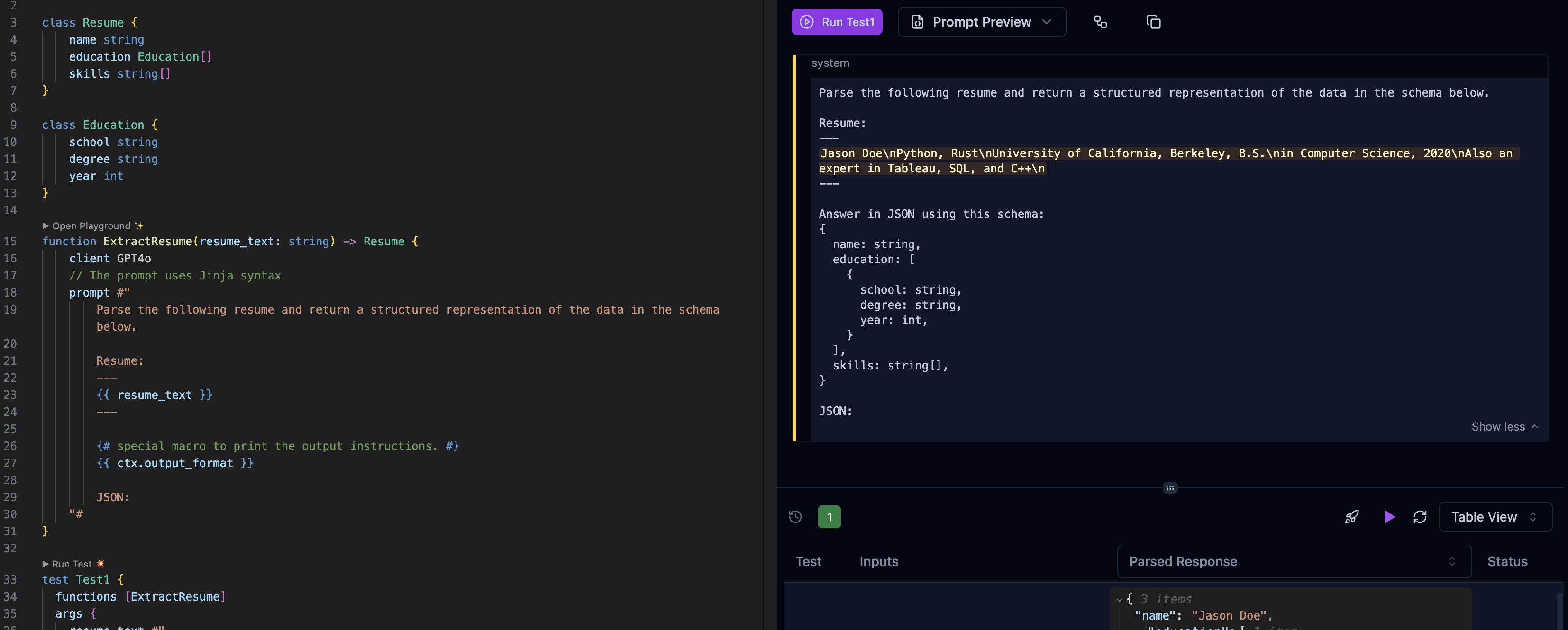

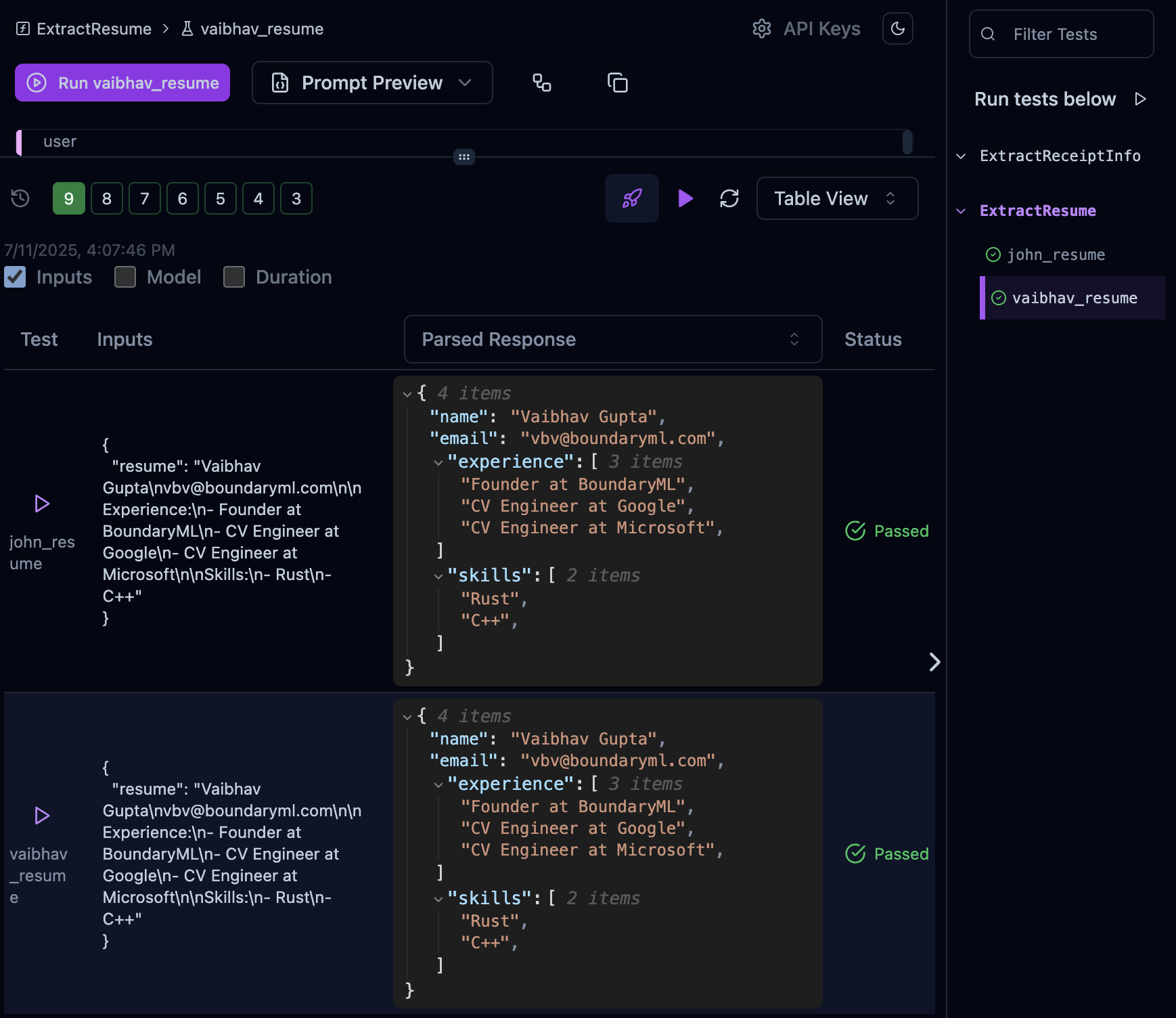

1. Instant Testing

Test in the VS Code playground without API calls or token costs:

- See the exact prompt that will be sent to the LLM

- Test with real data instantly - no API calls needed

- Save test cases for regression testing

- Visual prompt preview shows token usage and formatting

Build up a library of test cases that run instantly

2. Multi-Model Made Simple

3. Schema-Aligned Parsing (SAP)

BAML’s breakthrough innovation follows Postel’s Law: “Be conservative in what you do, be liberal in what you accept from others.”

Instead of rejecting imperfect outputs, SAP actively transforms them to match your schema using custom edit distance algorithms.
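To make the idea concrete, here is a toy lenient parser in the same spirit. It tolerates surrounding prose and trailing commas, maps misspelled keys to schema fields by Levenshtein distance, and coerces a few value types. This is not BAML's SAP algorithm. The repair rules, the `max_key_distance` threshold, and the coercions are assumptions chosen for illustration.

```python
import json
import re
from typing import Any


def levenshtein(a: str, b: str) -> int:
    # Classic dynamic-programming edit distance (insert, delete, substitute).
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = cur
    return prev[-1]


def lenient_parse(raw: str, schema: dict[str, type], max_key_distance: int = 2) -> dict[str, Any]:
    # 1. Tolerate surrounding prose or fences, and trailing commas.
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no object found")
    data = json.loads(re.sub(r",\s*([\]}])", r"\1", raw[start:end + 1]))
    result: dict[str, Any] = {}
    for key, value in data.items():
        # 2. Map each output key to the closest schema field name.
        best = min(schema, key=lambda name: levenshtein(key.lower(), name.lower()))
        if levenshtein(key.lower(), best.lower()) > max_key_distance:
            continue  # too far from every field: drop it
        # 3. Coerce the value toward the declared type where that is unambiguous.
        expected = schema[best]
        if expected is list and isinstance(value, str):
            value = [part.strip() for part in value.split(",") if part.strip()]
        elif expected is int and isinstance(value, str) and value.strip().isdigit():
            value = int(value)
        result[best] = value
    return result


schema = {"name": str, "skills": list, "years_experience": int}
raw = 'Here you go: {"Name": "Jane Doe", "skils": "Python, SQL", "years_experiance": "7",}'
print(lenient_parse(raw, schema))
```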

SAP vs Other Approaches:

Key insight: SAP + GPT-3.5 Turbo beats GPT-4o + structured outputs, saving you money while improving accuracy.

4. Production Features Built-In

5. Token Optimization

- See exact token usage for every call

- BAML’s schema format uses 80% fewer tokens than JSON Schema

- Optimize prompts with instant feedback
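The sketch below gives a rough feel for why a compact type notation is cheaper than JSON Schema. It compares approximate token counts for one small object. The compact rendering is illustrative and not necessarily the exact text BAML emits. Counts use a characters-divided-by-four heuristic rather than a real tokenizer, so the percentage is only indicative and says nothing about the 80% figure in general.

```python
import json

# A JSON Schema for a small resume object.
json_schema = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "skills": {"type": "array", "items": {"type": "string"}},
        "location": {"type": ["string", "null"]},
    },
    "required": ["name", "skills", "location"],
}

# A compact, TypeScript-like rendering of the same shape (illustrative).
compact_schema = """{
  name: string,
  skills: string[],
  location: string | null,
}"""


def rough_tokens(text: str) -> int:
    return max(1, len(text) // 4)  # ~4 chars/token heuristic, not a real tokenizer


verbose = rough_tokens(json.dumps(json_schema))
compact = rough_tokens(compact_schema)
print(f"JSON Schema ~{verbose} tokens, compact ~{compact} tokens "
      f"({1 - compact / verbose:.0%} fewer)")
```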

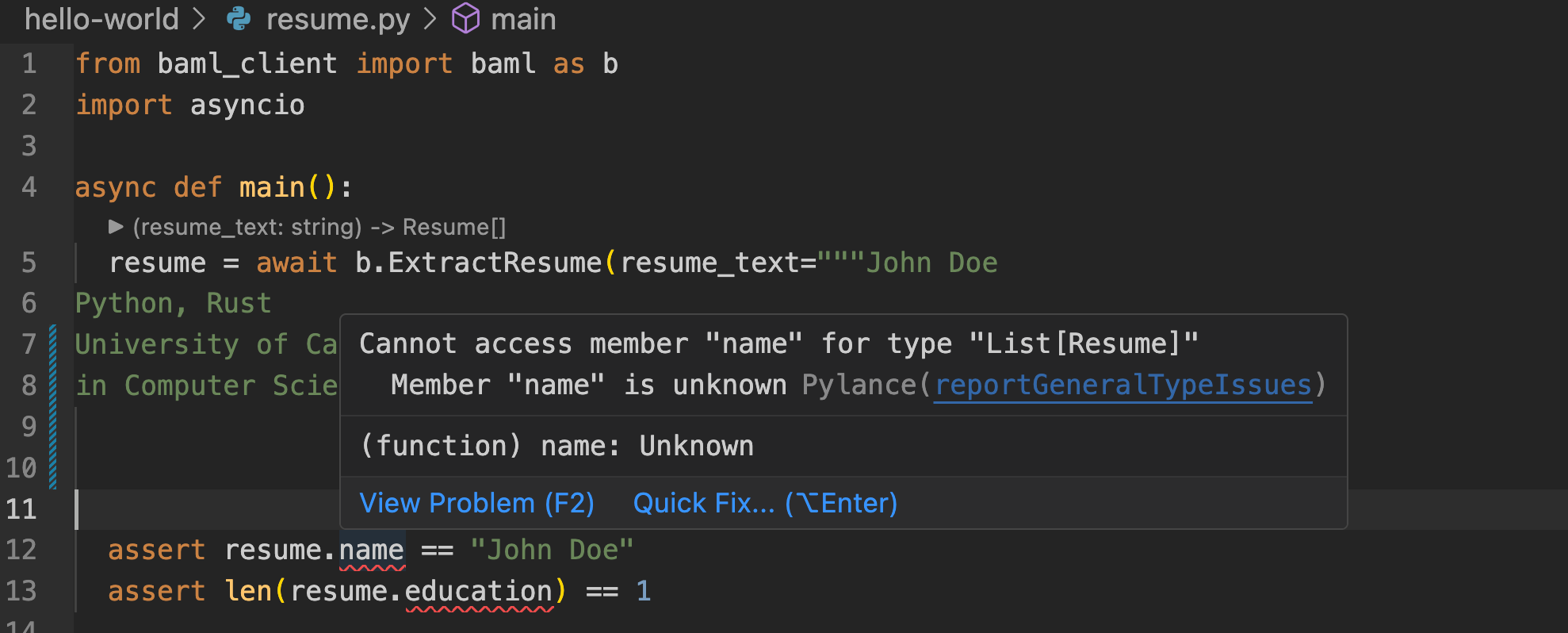

6. Type Safety Everywhere

BAML automatically generates fully typed clients for every supported language.

See how changes instantly update the prompt:

Change your types → Prompt automatically updates → See the difference immediately
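BAML does this through code generation. As a rough Python analogy (not BAML's mechanism), the schema section of the prompt can be derived from the type itself, so the two cannot drift apart. `type_name`, `render_schema`, and `build_prompt` are hypothetical helpers.

```python
from dataclasses import dataclass, fields
from typing import Optional, Union, get_args, get_origin


def type_name(tp) -> str:
    # Render a Python annotation as compact schema text.
    origin = get_origin(tp)
    if origin is list:
        return f"{type_name(get_args(tp)[0])}[]"
    if origin is Union:
        return " | ".join("null" if a is type(None) else type_name(a) for a in get_args(tp))
    names = {str: "string", int: "int", float: "float", bool: "bool"}
    return names.get(tp, getattr(tp, "__name__", str(tp)))


def render_schema(cls) -> str:
    body = "\n".join(f"  {f.name}: {type_name(f.type)}," for f in fields(cls))
    return "{\n" + body + "\n}"


def build_prompt(cls, resume_text: str) -> str:
    return (f"Extract from the resume. Answer with JSON matching:\n"
            f"{render_schema(cls)}\n\nResume:\n{resume_text}")


@dataclass
class Resume:
    name: str
    skills: list[str]
    location: Optional[str] = None


# Change the type, and the prompt follows with no string editing.
@dataclass
class ResumeV2(Resume):
    years_experience: int = 0


print(build_prompt(ResumeV2, "Jane Doe"))
```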

7. Advanced Streaming with UI Integration

BAML’s semantic streaming lets you build real UIs with loading bars and type-safe implementations.

What this enables:

- Loading bars - Show progress as structured data streams in

- Semantic guarantees - Title only appears when complete, content streams token by token

- Type-safe streaming - Full TypeScript/Python types for partial data

- UI state management - Know exactly what’s loading vs complete
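Conceptually, a semantically streamed object can be modeled as a series of typed snapshots. The sketch below is not BAML's API. The `PartialArticle` fields, the `progress` helper, and the simulated stream are hypothetical. It shows the guarantees listed above: an atomic field (`title`) stays `None` until complete, a streamed field (`content`) grows chunk by chunk, and completion flags drive a loading bar.

```python
from dataclasses import dataclass, replace
from typing import Iterator, Optional


@dataclass(frozen=True)
class PartialArticle:
    title: Optional[str] = None  # atomic field: None until fully received
    content: str = ""            # streamed field: grows chunk by chunk
    title_done: bool = False
    content_done: bool = False

    def progress(self) -> float:
        # Fraction of fields complete, e.g. to drive a loading bar.
        return (self.title_done + self.content_done) / 2


def stream_article(title_chunks: list[str], content_chunks: list[str]) -> Iterator[PartialArticle]:
    """Simulated stream; a real one would parse partial model output as it arrives."""
    snapshot = PartialArticle()
    title_buffer = ""
    for chunk in title_chunks:
        title_buffer += chunk
        yield snapshot  # title stays None until it is complete
    snapshot = replace(snapshot, title=title_buffer, title_done=True)
    yield snapshot
    for chunk in content_chunks:
        snapshot = replace(snapshot, content=snapshot.content + chunk)
        yield snapshot
    yield replace(snapshot, content_done=True)


for s in stream_article(["Why ", "BAML"], ["It ", "starts ", "simple."]):
    print(f"{s.progress():.0%}", s.title, repr(s.content))
```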

See semantic streaming in action - structured data streaming with loading states

The Bottom Line

You started with: a simple LLM call.

You ended up with: hundreds of lines of infrastructure code.

With BAML, you get:

- The simplicity of your first attempt

- All the production features you built manually

- Better reliability than you could build yourself

- 10x faster development iteration

- Full control and transparency

BAML is what LLM development should have been from the start. Ready to see the difference? Get started with BAML.