Comparing Langchain

Langchain is one of the most popular frameworks for building LLM applications. It provides abstractions for chains, agents, memory, and more.

Let’s dive into how Langchain handles structured extraction and where it falls short.

Why working with LLMs requires more than just Langchain

Langchain makes structured extraction look simple at first:
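A minimal sketch of what such a call can look like, assuming the `langchain-openai` package and its `with_structured_output` method. The schema fields, the default model name, and the `extract_resume` helper are illustrative assumptions. The schema is written as a plain JSON Schema dict here; Pydantic models are the more common way to declare it.

```python
# Hypothetical schema: the field names are illustrative.
RESUME_SCHEMA = {
    "title": "Resume",
    "description": "Structured fields extracted from a resume.",
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "skills": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["name", "skills"],
}


def extract_resume(resume_text: str, model: str = "gpt-4o-mini") -> dict:
    """Ask the model for a dict matching RESUME_SCHEMA (needs OPENAI_API_KEY)."""
    # Imported lazily so the schema can be inspected without Langchain installed.
    from langchain_openai import ChatOpenAI

    llm = ChatOpenAI(model=model)
    structured_llm = llm.with_structured_output(RESUME_SCHEMA)
    return structured_llm.invoke(resume_text)
```

Calling `extract_resume("Jane Doe\nSkills: Python, Rust")` would make a real API request, so it is left out of the snippet.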

That’s pretty neat! But now let’s add an Education model to make it more realistic:
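One way the nested schema could look, again as a plain JSON Schema dict of the kind Langchain's `with_structured_output` accepts. The `Education` fields (`school`, `degree`, `year`) are assumptions made for illustration.

```python
import json

# Hypothetical nested model: the Education fields are illustrative.
EDUCATION_SCHEMA = {
    "type": "object",
    "properties": {
        "school": {"type": "string"},
        "degree": {"type": "string"},
        "year": {"type": "integer"},
    },
    "required": ["school", "degree", "year"],
}

RESUME_SCHEMA = {
    "title": "Resume",
    "description": "Structured fields extracted from a resume.",
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "skills": {"type": "array", "items": {"type": "string"}},
        # A resume can list several education entries.
        "education": {"type": "array", "items": EDUCATION_SCHEMA},
    },
    "required": ["name", "skills", "education"],
}

# Printing the schema shows how much structure now travels with each request.
print(json.dumps(RESUME_SCHEMA, indent=2))
```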

Still works… but what’s actually happening under the hood? What prompt is being sent? How many tokens are we using?

Let’s dig deeper. Say you want to see what’s actually being sent to the model:
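A small sketch of turning on debug mode, assuming the `langchain-core` package is installed. `set_debug` is Langchain's global debug switch; the wrapper function `enable_langchain_debug` is our own name.

```python
def enable_langchain_debug() -> None:
    """Turn on Langchain's global debug logging for all later calls."""
    # Lazy import: this only works where langchain-core is installed.
    from langchain_core.globals import set_debug

    set_debug(True)
```

After calling `enable_langchain_debug()`, chain and model invocations log their inputs and outputs to the console.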

But even with debug mode, you still can’t easily:

- Modify the extraction prompt

- See the exact token count

- Understand why extraction failed for certain inputs

When things go wrong

Here’s where it gets tricky. Your PM asks: “Can we classify these resumes by seniority level?”
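As a rough sketch, the classification can be expressed as another schema with a fixed set of allowed values. The level names below are hypothetical, and `is_valid_classification` is a helper we introduce to check a result.

```python
# Hypothetical labels: your team's levels will differ.
SENIORITY_LEVELS = ["junior", "mid", "senior", "staff"]

SENIORITY_SCHEMA = {
    "title": "SeniorityClassification",
    "description": "Seniority level inferred from a resume.",
    "type": "object",
    "properties": {
        # "enum" restricts the model to one of the known labels.
        "seniority": {"type": "string", "enum": SENIORITY_LEVELS},
    },
    "required": ["seniority"],
}


def is_valid_classification(result: dict) -> bool:
    """Check that a structured result uses one of the allowed labels."""
    return result.get("seniority") in SENIORITY_LEVELS
```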

But now you realize you need to give the LLM context about what each level means:
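A sketch of how that context usually ends up in code: a dictionary of level descriptions stitched into the prompt by hand. The level names, their descriptions, and `build_classification_prompt` are all made up for illustration.

```python
# Hypothetical definitions: the descriptions are placeholders.
SENIORITY_GUIDE = {
    "junior": "up to 2 years of experience, works on well-scoped tasks",
    "mid": "2 to 5 years of experience, owns features end to end",
    "senior": "5+ years of experience, leads projects and mentors others",
}


def build_classification_prompt(resume_text: str) -> str:
    """Build a prompt that explains each level before showing the resume."""
    level_lines = [f"- {level}: {meaning}" for level, meaning in SENIORITY_GUIDE.items()]
    return (
        "Classify the resume below into exactly one seniority level.\n\n"
        "Levels:\n" + "\n".join(level_lines) + "\n\n"
        "Resume:\n" + resume_text
    )


print(build_classification_prompt("Jane Doe\n6 years at Acme as tech lead"))
```

The level definitions now live in Python strings, separate from the schema that names the same levels, so the two can drift apart.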

Your clean code is starting to look messy. But wait, there’s more!

Multi-model madness

Your company wants to use Claude for some tasks (better reasoning) and GPT-4-mini for others (cost savings). With Langchain:
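A hedged sketch of what the routing might look like, assuming the `langchain-anthropic` and `langchain-openai` packages. The task names, the model identifiers, and the `make_llm` helper are assumptions, not recommendations.

```python
# Hypothetical routing table: task names and model IDs are assumptions.
MODEL_ROUTES = {
    "reasoning": ("anthropic", "claude-3-5-sonnet-latest"),
    "extraction": ("openai", "gpt-4o-mini"),
}


def make_llm(task: str):
    """Return a chat model for the task; each provider needs its own package and API key."""
    if task not in MODEL_ROUTES:
        raise ValueError(f"Unknown task: {task!r}")
    provider, model = MODEL_ROUTES[task]
    if provider == "anthropic":
        from langchain_anthropic import ChatAnthropic  # separate package per provider

        return ChatAnthropic(model=model)
    if provider == "openai":
        from langchain_openai import ChatOpenAI

        return ChatOpenAI(model=model)
    raise ValueError(f"Unknown provider: {provider!r}")
```

Each provider brings its own import, its own credentials, and potentially its own structured-output behavior, so the branching tends to grow with every model you add.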

Testing nightmare

Now you want to test your extraction logic without burning through API credits:
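The usual answer is mocking. A minimal sketch with the standard library's `unittest.mock`, where `extract_name` is a hypothetical piece of glue code around any object that has an `invoke` method:

```python
from unittest.mock import MagicMock


def extract_name(llm, resume_text: str) -> str:
    """Call a structured-output runnable and return the extracted name."""
    result = llm.invoke(resume_text)
    return result["name"]


def test_extract_name_without_api_call() -> None:
    # The mock stands in for the model, so no request leaves the machine.
    fake_llm = MagicMock()
    fake_llm.invoke.return_value = {"name": "Jane Doe", "skills": ["Python"]}

    assert extract_name(fake_llm, "Jane Doe\nSkills: Python") == "Jane Doe"
    fake_llm.invoke.assert_called_once_with("Jane Doe\nSkills: Python")


test_extract_name_without_api_call()
```

This saves credits, but it only exercises your glue code. The mock returns whatever you tell it to, so the test says nothing about whether the prompt or schema actually works against a real model.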

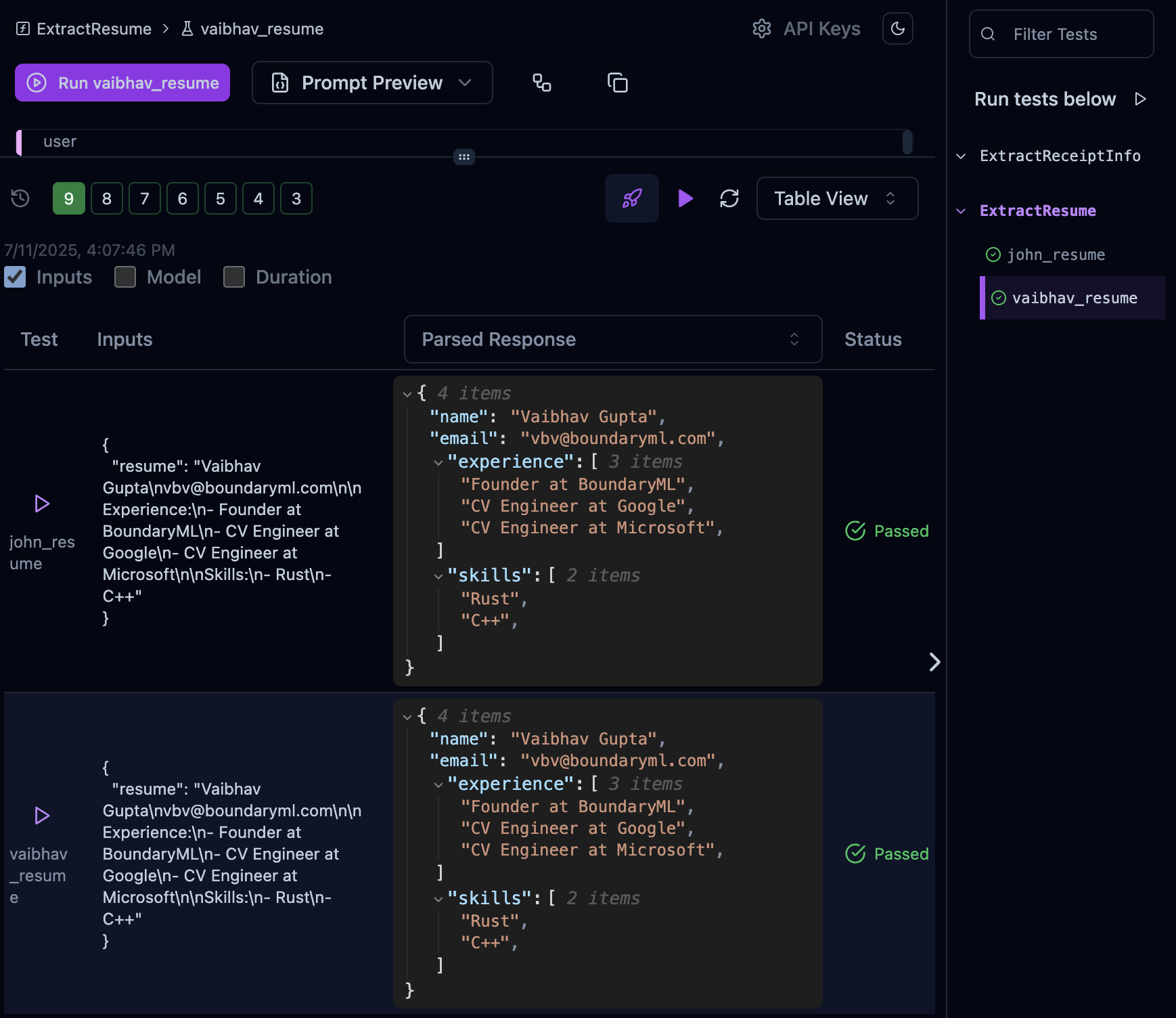

With BAML, testing is visual and instant:

Test your prompts instantly without API calls or mocking

The token mystery

Your CFO asks: “Why is our OpenAI bill so high?” You investigate:
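One way to start, sketched under the assumption that you are on a recent Langchain version, where chat model responses carry a `usage_metadata` dict with `input_tokens`, `output_tokens`, and `total_tokens`. With structured output, passing `include_raw=True` to `with_structured_output` returns the raw message under the `"raw"` key. The `token_report` helper and the numbers below are made up for illustration.

```python
from types import SimpleNamespace


def token_report(raw_message) -> dict:
    """Pull token counts off a response message, defaulting to zero if absent."""
    usage = getattr(raw_message, "usage_metadata", None) or {}
    return {
        "input_tokens": usage.get("input_tokens", 0),
        "output_tokens": usage.get("output_tokens", 0),
        "total_tokens": usage.get("total_tokens", 0),
    }


# Stand-in for a real response message, with invented numbers.
fake_message = SimpleNamespace(
    usage_metadata={"input_tokens": 812, "output_tokens": 96, "total_tokens": 908}
)
print(token_report(fake_message))
```

This tells you how many tokens a call used, not where they came from.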

But you still don’t know WHY it’s using so many tokens. Is it the schema format? The prompt template? The retry logic?

Enter BAML

BAML was built specifically for these LLM challenges. Here’s the same resume extraction:

Now look what you get:

- See exactly what’s sent to the LLM - The prompt is right there!

- Test without API calls - Use the VSCode playground

- Switch models instantly - Just change `client GPT4` to `client Claude`

- Token count visibility - BAML shows exact token usage

- Modify prompts easily - It’s just a template string

Multi-model support done right

Use it in Python:
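A minimal sketch of the calling side, assuming a BAML function named `ExtractResume` has been defined and the `baml_client` package has been generated with `baml-cli generate`. The function name and the `extract_with_baml` wrapper are hypothetical, and the optional `client` argument exists only so the snippet can be exercised without generated code.

```python
from types import SimpleNamespace


def extract_with_baml(resume_text: str, client=None):
    """Call the generated BAML function and return its typed result."""
    if client is None:
        # Generated code: only importable after BAML has produced baml_client.
        from baml_client import b as client
    return client.ExtractResume(resume_text)


# Stand-in client so the example runs without generated code or an API key.
fake_client = SimpleNamespace(ExtractResume=lambda text: {"name": text.split("\n")[0]})
print(extract_with_baml("Jane Doe\nSkills: Python", client=fake_client))
```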

The bottom line

Langchain is great for building complex LLM applications with chains, agents, and memory. But for structured extraction, you’re fighting against abstractions that hide important details.

BAML gives you what Langchain can’t:

- Full prompt transparency - See and control exactly what’s sent to the LLM

- Native testing - Test in VSCode without API calls or burning tokens

- Multi-model by design - Switch providers with one line, works with any model

- Token visibility - Know exactly what you’re paying for and optimize costs

- Type safety - Generated clients with autocomplete that always match your schema

- Schema-Aligned Parsing - Get structured outputs from any model, even without function calling

- Streaming + Structure - Stream structured data with loading bars and type-safe parsing

Why this matters for production:

- Faster iteration - See changes instantly without running Python code

- Better debugging - Know exactly why extraction failed

- Cost optimization - Understand and reduce token usage

- Model flexibility - Never get locked into one provider

- Team collaboration - Prompts are code, not hidden strings

We built BAML because we were tired of wrestling with framework abstractions when all we wanted was reliable structured extraction with full developer control.

Limitations of BAML

BAML does have some limitations we are continuously working on:

- It is a new language. However, it is fully open source and getting started takes less than 10 minutes

- Developing requires VSCode. You could use vim but we don’t recommend it

- It’s focused on structured extraction - not a full LLM framework like Langchain

If you need complex chains and agents, use Langchain. If you want the best structured extraction experience with full control, try BAML.