Comparing AI SDK

AI SDK by Vercel is a powerful toolkit for building AI-powered applications in TypeScript. It’s particularly popular for Next.js and React developers.

Let’s explore how AI SDK handles structured extraction and where the complexity creeps in.

Why working with LLMs requires more than just AI SDK

AI SDK makes structured data generation look elegant at first: you hand a single call a Zod schema and a prompt, and you get a typed object back.

Clean and simple! But let’s make it more realistic by adding an education section to the schema.

Still works! But… what’s the actual prompt being sent? How many tokens is this costing?

The visibility problem

Your manager asks: “Why did the extraction fail for this particular resume?”

You start digging through the AI SDK source code to understand the prompt construction…
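One common workaround is to intercept the outgoing request and log its body instead of reading source code. The sketch below illustrates that idea in plain TypeScript. It assumes your provider setup lets you supply your own HTTP send function; `Send`, `LoggedRequest`, and `withRequestLog` are hypothetical names, and `Send` is a simplified stand-in rather than the real `fetch` signature.

```typescript
// Simplified, hypothetical stand-in for an HTTP send function (not real fetch).
type Send = (url: string, body: string) => Promise<string>;

interface LoggedRequest {
  url: string;
  body: string;
}

// Wrap a send function so every request body is recorded before it goes out.
function withRequestLog(send: Send, log: LoggedRequest[]): Send {
  return async (url, body) => {
    log.push({ url, body });
    return send(url, body);
  };
}

// Usage with a fake transport, so no network call is made.
const requestLog: LoggedRequest[] = [];
const fakeSend: Send = async () => '{"ok":true}';
const loggedSend = withRequestLog(fakeSend, requestLog);
```

Even in this small form, it is one more piece of infrastructure you have to own just to answer "what did we send?".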

Classification challenges

Now your PM wants to classify resumes by seniority level.

But wait… how do you tell the model what “junior” vs “senior” means? Zod enums are just string literals:
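To make the gap concrete, here is a minimal sketch in plain TypeScript (no AI SDK or Zod imports, so it stays self-contained). The seniority levels, their descriptions, and the `buildSeniorityPrompt` helper are all assumptions made up for illustration. The point is only that the definitions live outside the enum, and the model never sees them unless you splice them into the prompt yourself.

```typescript
// The enum as a schema sees it: three bare string literals.
const SENIORITY_LEVELS = ["junior", "mid", "senior"] as const;
type Seniority = (typeof SENIORITY_LEVELS)[number];

// Hypothetical definitions (assumed for illustration). They are invisible to
// the model unless they are written into the prompt text.
const SENIORITY_DESCRIPTIONS: Record<Seniority, string> = {
  junior: "0-2 years of experience, works under guidance",
  mid: "2-5 years of experience, works independently",
  senior: "5+ years of experience, leads projects or mentors others",
};

// Splice the definitions into the prompt by hand.
function buildSeniorityPrompt(resumeText: string): string {
  const definitions = SENIORITY_LEVELS
    .map((level) => `- ${level}: ${SENIORITY_DESCRIPTIONS[level]}`)
    .join("\n");
  return [
    "Classify the resume below into exactly one seniority level.",
    "Levels:",
    definitions,
    "Resume:",
    resumeText,
  ].join("\n");
}
```

Calling `buildSeniorityPrompt(resumeText)` gives you a prompt string that carries the definitions, but the schema and the prompt text are now two things to keep in sync.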

Your clean abstraction is leaking…

Multi-provider pain

Your company wants to use different models for different use cases:
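As a rough illustration of the bookkeeping this tends to require, here is a minimal routing table in plain TypeScript. The use cases, provider names, and model identifiers are placeholders rather than real model names, and `pickModel` and `requiredProviders` are hypothetical helpers.

```typescript
type UseCase = "extraction" | "classification" | "summarization";

interface ModelChoice {
  provider: string; // which provider client to use
  modelId: string; // provider-specific model name
  temperature: number;
}

// Placeholder values (assumed for illustration); substitute real providers and models.
const MODEL_ROUTES: Record<UseCase, ModelChoice> = {
  extraction: { provider: "provider-a", modelId: "large-model", temperature: 0 },
  classification: { provider: "provider-b", modelId: "small-model", temperature: 0 },
  summarization: { provider: "provider-c", modelId: "medium-model", temperature: 0.3 },
};

// Look up the model configured for a given use case.
function pickModel(useCase: UseCase): ModelChoice {
  return MODEL_ROUTES[useCase];
}

// Collect the distinct providers a deployment must configure credentials for.
function requiredProviders(): string[] {
  const providers: string[] = [];
  const useCases: UseCase[] = ["extraction", "classification", "summarization"];
  for (const useCase of useCases) {
    const provider = MODEL_ROUTES[useCase].provider;
    if (providers.indexOf(provider) === -1) providers.push(provider);
  }
  return providers;
}
```

The table centralizes the choice, but each provider still needs its own client setup and credentials elsewhere in the app.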

Testing without burning money

You want to test your extraction logic:
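One typical approach is to inject the model call so a test can swap in a stub. The sketch below assumes a simplified `Resume` shape and a hypothetical `GenerateResume` function type standing in for the real SDK call; none of these names come from AI SDK itself.

```typescript
interface Resume {
  name: string;
  skills: string[];
}

// The only thing the extraction logic needs from a model: prompt in, object out.
type GenerateResume = (prompt: string) => Promise<Resume>;

async function extractResume(
  resumeText: string,
  generate: GenerateResume,
): Promise<Resume> {
  const prompt = `Extract the candidate's name and skills from this resume:\n${resumeText}`;
  const raw = await generate(prompt);

  // Post-processing worth testing without a model call:
  // trim, lowercase, and de-duplicate skills while keeping their order.
  const skills: string[] = [];
  for (const skill of raw.skills) {
    const normalized = skill.trim().toLowerCase();
    if (normalized !== "" && skills.indexOf(normalized) === -1) {
      skills.push(normalized);
    }
  }
  return { name: raw.name.trim(), skills };
}

// A stub "model" that records prompts and returns a canned, messy answer.
const recordedPrompts: string[] = [];
const stubGenerate: GenerateResume = async (prompt) => {
  recordedPrompts.push(prompt);
  return { name: " Jane Doe ", skills: ["TypeScript", "typescript ", "React"] };
};
```

A stub like this only exercises your own post-processing. It says nothing about the prompt that is actually sent to the provider or how a real model would respond.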

The real-world spiral

As your app grows, you need:

- Custom extraction strategies for different document types

- Retry logic for flaky models

- Token usage tracking for cost control

- Prompt versioning for A/B testing

Your code evolves into:
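A minimal sketch of what such a wrapper might look like, covering retries, token tracking, and a prompt version tag. `ExtractionService`, `ModelResult`, and `TokenUsage` are hypothetical names, and the usage fields are an assumed shape, not a specific SDK's response type. Retries here are immediate; a real version would likely add backoff.

```typescript
interface TokenUsage {
  promptTokens: number;
  completionTokens: number;
}

interface ModelResult<T> {
  object: T;
  usage: TokenUsage;
}

class ExtractionService {
  private totalTokens = 0;

  constructor(
    private readonly maxAttempts: number,
    private readonly promptVersion: string,
  ) {}

  // Run a model call with retries, accumulating token usage on success.
  async run<T>(call: (promptVersion: string) => Promise<ModelResult<T>>): Promise<T> {
    let lastError: unknown = new Error("no attempts made");
    for (let attempt = 1; attempt <= this.maxAttempts; attempt++) {
      try {
        const result = await call(this.promptVersion);
        this.totalTokens += result.usage.promptTokens + result.usage.completionTokens;
        return result.object;
      } catch (error) {
        // Failed attempts report no usage here, so their tokens go uncounted.
        lastError = error;
      }
    }
    throw lastError;
  }

  get tokensUsed(): number {
    return this.totalTokens;
  }
}

// Usage with a fake flaky call: fails once, then succeeds.
let callCount = 0;
const flakyCall = async (version: string): Promise<ModelResult<{ candidate: string }>> => {
  callCount += 1;
  if (callCount === 1) throw new Error("simulated timeout");
  return {
    object: { candidate: `Jane (${version})` },
    usage: { promptTokens: 120, completionTokens: 30 },
  };
};
```

With `new ExtractionService(3, "v2").run(flakyCall)`, the first attempt fails, the second succeeds, and only the successful attempt's tokens are counted.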

The simple AI SDK call is now buried in layers of infrastructure code.

Enter BAML

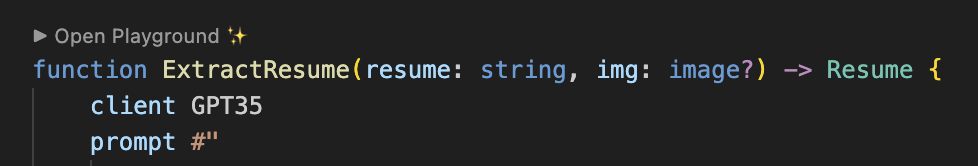

BAML was designed for the reality of production LLM applications. Here’s the same resume extraction:

Notice what you get immediately:

- The prompt is right there - No digging through source code

- Enums with descriptions - The model knows what each value means

- Type definitions that become prompts - Fewer tokens, clearer instructions

Multi-model made simple

Use it in TypeScript:

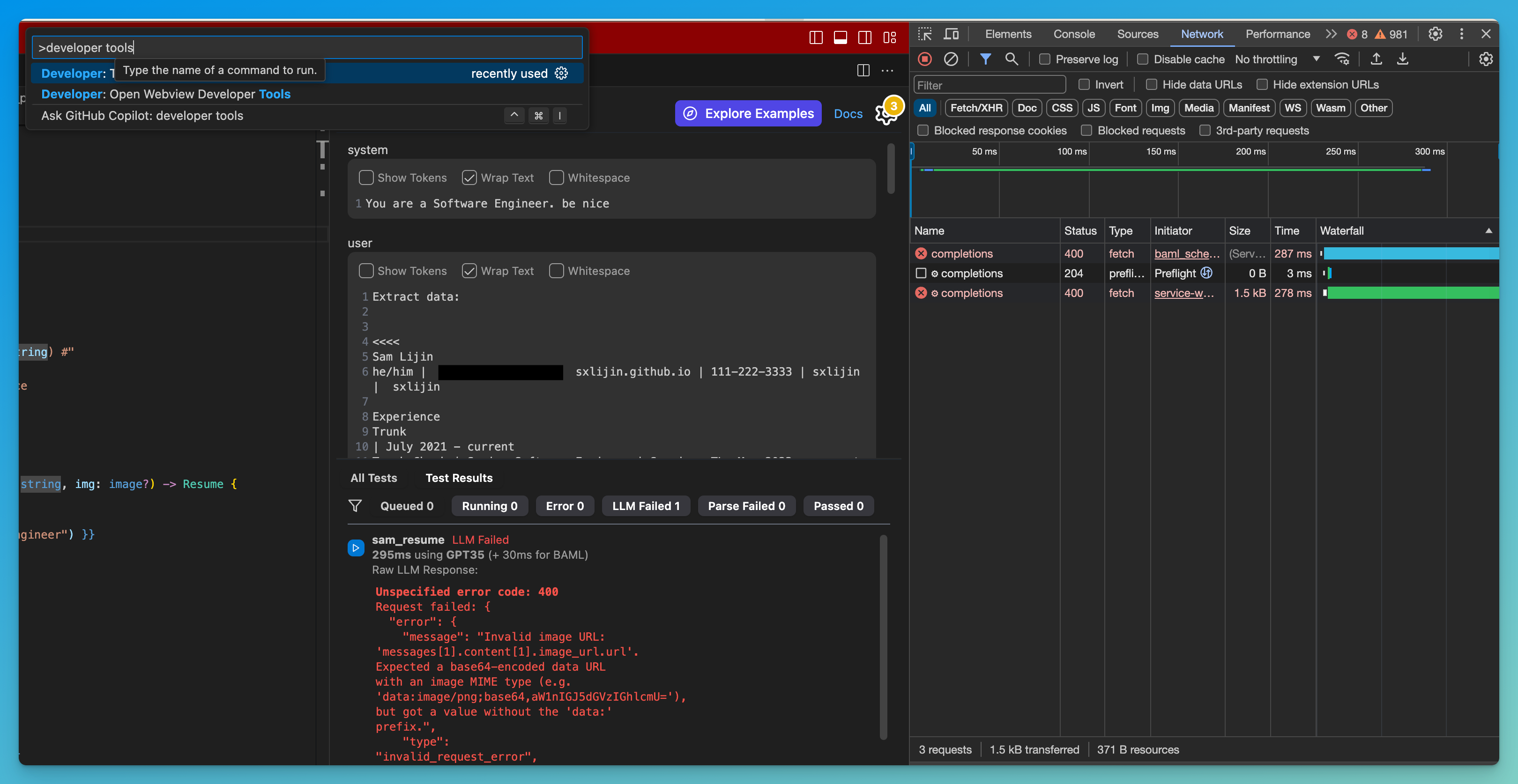

Testing that actually tests

With BAML’s VSCode extension, you can:

- Test prompts without API calls - Instant feedback

- See exactly what will be sent - Full transparency

- Iterate on prompts instantly - No deploy cycles

- Save test cases for regression testing

No mocking required - you’re testing the actual prompt and parsing logic.

The bottom line

AI SDK is fantastic for building streaming AI applications in Next.js. But for structured extraction, you end up fighting the abstractions.

BAML’s advantages over AI SDK:

- Prompt transparency - See and control exactly what’s sent to the LLM

- Purpose-built types - Enums with descriptions, aliases, better schema format

- Unified model interface - All providers work the same way, switch with one line

- Real testing - Test in VSCode without API calls or burning tokens

- Schema-Aligned Parsing - Get structured outputs from any model

- Better token efficiency - Optimized schema format uses fewer tokens

- Production features - Built-in retries, fallbacks, and error handling

What this means for your TypeScript apps:

- Faster development - Test prompts instantly without running Next.js

- Better debugging - Know exactly why extraction failed

- Cost optimization - See token usage and optimize prompts

- Model flexibility - Never get locked into one provider

- Cleaner code - No wrapper classes or infrastructure code needed

AI SDK is great for: Rapid prototyping, simple use cases

BAML is great for: Production structured extraction, multi-model apps, cost optimization, streaming UIs with semantic streaming

We built BAML because we were tired of elegant APIs that fall apart when you need production reliability and control.

Limitations of BAML

BAML does have some limitations:

- It’s a new language (though learning it takes under 10 minutes)

- Best experience requires VSCode

Ready for bulletproof structured extraction with full control? Try BAML.